Small vs. Large Tests: Which One Is Ideal?

In the modern world of software testing, where methodologies such as Agile and DevOps emphasize fast delivery, companies are looking to increase efficiency in every department. QA is no different. Many software testing managers try to identify the ideal size for their tests in order to improve their effectiveness and avoid wasting time on tests that do not produce value. So the prevailing question is: which test size is ideal?

What Affects the Size of the Test?

Many variables influence test size. The scope and depth of your tests, including the functionalities and behaviors covered in each one, obviously define it. But there is more to it than that. The type of application your team is testing, the development methodology your team is following, and the subject matter knowledge of your testers are also crucial factors.

The combination of these elements allows managers to plan their organization’s testing efforts in a way that provides the most value. It’s important to note that there is no one-size-fits-all approach to testing. Each organization has different needs and team structures, and prioritizes different products and applications.

Test Size Trade-Offs

There are some trade-offs when it comes to test size that managers should consider before assigning tasks to their team and executing tests:

Test Granularity & Coverage

Test size is directly related to the scope of your test. Small tests focus on a single functionality or area of the software that testers want to confirm is bug-free and working as intended. A unit test, for example, is the first and smallest kind of test: it checks the code of one unit at a time to verify that each unit behaves as expected.

Large tests, on the other hand, cover wider areas of your software by checking whether multiple functionalities work together seamlessly. User Acceptance Testing, for example, is a large, comprehensive test in which end users determine whether the software works properly as a whole in a real-world environment.
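To make the difference in scope concrete, here is a minimal sketch using Python's standard `unittest` module. The functions `apply_discount` and `cart_total` are hypothetical and exist only for illustration; the small test checks one unit in isolation, while the larger test checks that several pieces work together.

```python
import unittest


# Hypothetical application code, invented for this illustration.
def apply_discount(price: float, percent: float) -> float:
    """Return the price after subtracting a percentage discount."""
    return round(price * (1 - percent / 100), 2)


def cart_total(prices, percent=0.0, shipping=0.0):
    """Sum the prices, apply the discount, then add shipping."""
    subtotal = sum(prices)
    return round(apply_discount(subtotal, percent) + shipping, 2)


class SmallTest(unittest.TestCase):
    """Small test: a single unit, a single behavior."""

    def test_apply_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)


class LargerTest(unittest.TestCase):
    """Larger test: summing, discounting, and shipping together."""

    def test_cart_total_with_discount_and_shipping(self):
        total = cart_total([40.0, 60.0], percent=10, shipping=5.0)
        self.assertEqual(total, 95.0)
```

Saved to a file, both classes can be run with `python -m unittest`. A real large test such as User Acceptance Testing covers far more than this, but the contrast in scope is the same.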

Test Execution & Maintenance

Another important trade-off for test managers to consider when determining test size is the execution and maintenance of the tests. Smaller tests are quick to execute and complete, which gives faster feedback on the software’s functionality. Because they cover specific software components or functionalities, they can also be easily reused in future testing cycles, saving both time and cost.

Large tests are more complex and time-consuming to perform. They often require more effort and resources for both execution and maintenance, and as the software evolves and changes are made, maintaining and reusing them becomes a major challenge.

Best Practices to Determine the Ideal Test Size

To be clear, both types are crucial for the success of your software! Test managers must strike a balance between the faster execution and lower maintenance effort of smaller tests and the comprehensive coverage provided by larger tests. Still, there are some best practices that can help testing teams in most testing approaches:

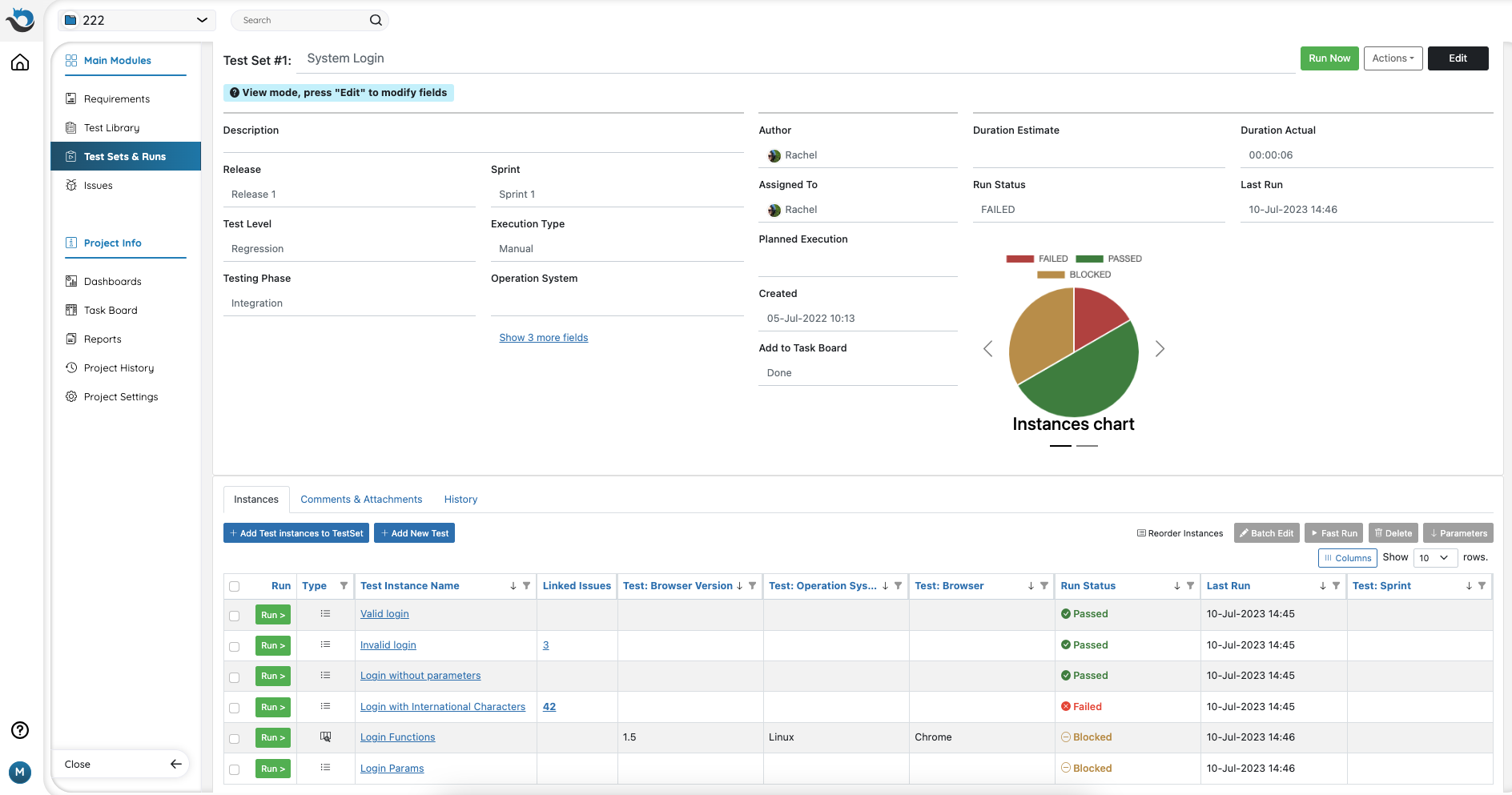

Plan Your Tests as Reusable Blocks

The first tip we suggest is to leverage test case reusability to save valuable time and effort. To make your tests reusable, make sure they cover small and simple pieces of functionality, which are easier to reuse. If you are using a test management platform such as PractiTest, you can group several tests together to generate a test set that covers more complex scenarios of related functionalities.
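The idea of small tests grouped into test sets can be sketched as a simple data model. This is only an illustration: the `ManualTest` and `TestSet` classes and the sample tests are hypothetical and do not represent the API of PractiTest or any other tool.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class ManualTest:
    """A small, reusable test covering one piece of functionality."""
    name: str
    steps: tuple[str, ...]


@dataclass
class TestSet:
    """A named group of small tests that together cover a larger scenario."""
    name: str
    tests: list[ManualTest] = field(default_factory=list)

    def total_steps(self) -> int:
        """Count the steps across every test in the set."""
        return sum(len(test.steps) for test in self.tests)


# Hypothetical small tests, each covering a single functionality.
login = ManualTest("Login", ("Open login page", "Enter credentials", "Submit"))
add_to_cart = ManualTest(
    "Add to cart", ("Search for item", "Open item page", "Click Add to cart")
)
checkout = ManualTest(
    "Checkout", ("Open cart", "Enter payment details", "Confirm order")
)

# The same Login test is reused in two different sets.
purchase_flow = TestSet("Purchase flow", [login, add_to_cart, checkout])
smoke = TestSet("Smoke", [login])
```

Because `login` is defined once and referenced from both sets, a change to the login steps only has to be made in one place.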

Keep Your Tests Short

A testing session should not last more than 60–90 minutes. After this time the human brain begins to tire and becomes less effective, meaning that your testers may miss some of the issues in your product.

When planning tests, take into account that you want each atomic test to last less than this time. Ideally (following up on the section above), you should strive to create tests that last between 15 and 45 minutes and plan your testing session to cover 3, 4, or 5 of these tests.

In any case, if you have a test with over 40 steps, it’s a good idea to review it and consider breaking it down into several smaller and more modular tests.
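The time and step limits above can be checked mechanically. The sketch below is one possible approach, not a prescribed method: it groups tests into sessions in their given order without exceeding 90 minutes, and flags tests with more than 40 steps for review. The function names and sample data are hypothetical.

```python
SESSION_LIMIT_MIN = 90       # upper end of the 60-90 minute session
REVIEW_STEP_THRESHOLD = 40   # tests above this step count deserve a review


def needs_review(step_count: int) -> bool:
    """True when a test is long enough to consider splitting it."""
    return step_count > REVIEW_STEP_THRESHOLD


def plan_sessions(tests, limit=SESSION_LIMIT_MIN):
    """Group (name, minutes) pairs into sessions, keeping their order.

    A new session starts whenever adding the next test would push the
    current session past the time limit.
    """
    sessions, current, used = [], [], 0
    for name, minutes in tests:
        if minutes > limit:
            raise ValueError(f"{name} alone exceeds {limit} minutes")
        if current and used + minutes > limit:
            sessions.append(current)
            current, used = [], 0
        current.append(name)
        used += minutes
    if current:
        sessions.append(current)
    return sessions


# Hypothetical tests with estimated durations in minutes.
estimates = [
    ("Login", 15),
    ("Search", 30),
    ("Checkout", 45),
    ("Refunds", 40),
    ("Reports", 20),
]
sessions = plan_sessions(estimates)
print(sessions)
```

With these estimates, the first three tests fill one 90-minute session and the remaining two form a second, shorter one.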

Write Steps Depending on the Knowledge Level of Your Testers

Unless you are working in a regulated environment where you have to work with very low-level test steps and scripts, try to match the depth or level of your steps to the knowledge and level of your testers.

For example, if your testers are experts and power users of the application they are testing, you may want to work mostly with step names and leave the step description empty. This is because it may be obvious to them how to perform the specific task described in the step name. On the other hand, if your testers are not experts, or you want them to perform the operation in a very specific way, then very low-level step descriptions may be a better option.

Most of the time, you will want to do something that falls in the middle between these two extreme examples.

Create Guidelines for Writing Tests and Steps

Writing tests is something very personal. Every tester, if left up to them, would write tests in a different way depending on what they know and prefer. If the test styling approach is left to each tester, you will get individual tests that are difficult to combine in order to create good test sets.

To achieve homogeneity in testing, it is recommended to create guidelines for your testers. These guidelines need to match the needs of your team and process.

Examples of guidelines include:

- Creating steps that cover only one screen at a time
- Writing tests that have between 5 and 15 steps
- Storing the data needed to perform a step as attachments to that step when you have more than one set of data
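Guidelines like these are easier to follow when they can be checked automatically. Below is a minimal sketch of such a check for the first two example guidelines; the third, about attachments, depends on your test management tool and is not modeled here. The data layout (each step as a description plus the list of screens it touches) is an assumption made for illustration.

```python
# Hypothetical limits taken from the example guidelines above.
MIN_STEPS = 5
MAX_STEPS = 15


def check_guidelines(test_name, steps):
    """Return a list of guideline violations for one test.

    `steps` is a list of (description, screens) pairs, where `screens`
    is the list of application screens that the step touches.
    """
    problems = []
    if not MIN_STEPS <= len(steps) <= MAX_STEPS:
        problems.append(
            f"{test_name}: has {len(steps)} steps, "
            f"expected {MIN_STEPS} to {MAX_STEPS}"
        )
    for number, (description, screens) in enumerate(steps, start=1):
        if len(screens) != 1:
            problems.append(
                f"{test_name}: step {number} ({description}) "
                f"covers {len(screens)} screens"
            )
    return problems


# Illustrative test: too few steps, and step 2 spans two screens.
checkout_steps = [
    ("Open cart", ["Cart"]),
    ("Enter address and pay", ["Shipping", "Payment"]),
    ("Confirm order", ["Confirmation"]),
]
violations = check_guidelines("Checkout", checkout_steps)
for line in violations:
    print(line)
```

A check like this could run whenever tests are exported or reviewed, so that deviations from the team's guidelines are caught before the tests are combined into test sets.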

Combine Automation in Your Testing Efforts

Automation has become a fundamental aspect of testing efforts, as many organizations rely on different tools to achieve faster test execution. Tests that are time-consuming or prone to human error can be executed well by automation tools, which deliver highly accurate results in a short amount of time. Performance tests, stress tests, and regression tests are among the most widely automated test types.

It is important to note that all of the above should not detract from the importance of manual testing. Manual testing offers flexibility and adaptability, and is suitable for testing scenarios requiring a human eye and intuition such as exploratory testing. Managers should find the right combination of manual and automated testing in order to improve their testing efficiency and cover both small and complex tests.

Summing Up

In conclusion, test size plays a significant role in software testing. While smaller tests offer the advantages of faster execution and reusability, larger tests provide comprehensive coverage. Test managers must strike a balance between small and large tests, create guidelines for writing tests, and plan tests based on their testers’ knowledge.

Managers should apply automated testing to the right tests to increase efficiency and shorten testing cycles. The most important thing to remember is that a modular approach saves valuable time in writing, managing, and maintaining your tests, ultimately optimizing software testing for your organization.