Introduction

System testing is perhaps the single most important level of testing because it involves testing all the key elements of a system – software, hardware, documentation, data, networking, and how people interact with the system.

No other level of testing is this technical or encompasses this scope of testing.

This is not meant to imply that other levels of testing such as unit testing, integration testing, and acceptance testing are not important. It is just that system testing is where all key elements are tested together as a working technology solution.

Table Of Contents

- What is System Testing?

- The Nature of Systems

- System Testing in the V-Model

- Why Perform System Testing?

- When Does System Testing Occur in Software Development?

- What is System Testing in Software Engineering?

- System Testing in an Agile Context

- Who Performs System Testing?

- Which Types of Tests Occur in System Testing?

- Testing in a System of Systems Context

- Sub-system Testing

- Risks and Challenges of System Testing

- What is a System Test Plan?

- Conclusion

What is System Testing?

System testing is a level of testing that verifies that stated requirements or other specifications (use cases, user stories, etc.) have been met at an acceptable level of quality.

According to the ISTQB Glossary, system testing is “A test level that focuses on verifying that a system as a whole meets specified requirements.”

As mentioned in the introduction, system testing encompasses more than just software. System testing also includes hardware, documentation such as procedures, data, networking, and people (Figure 1). All of these must work together to achieve the desired result.

Figure 1 – Systems Components and Their Interactions

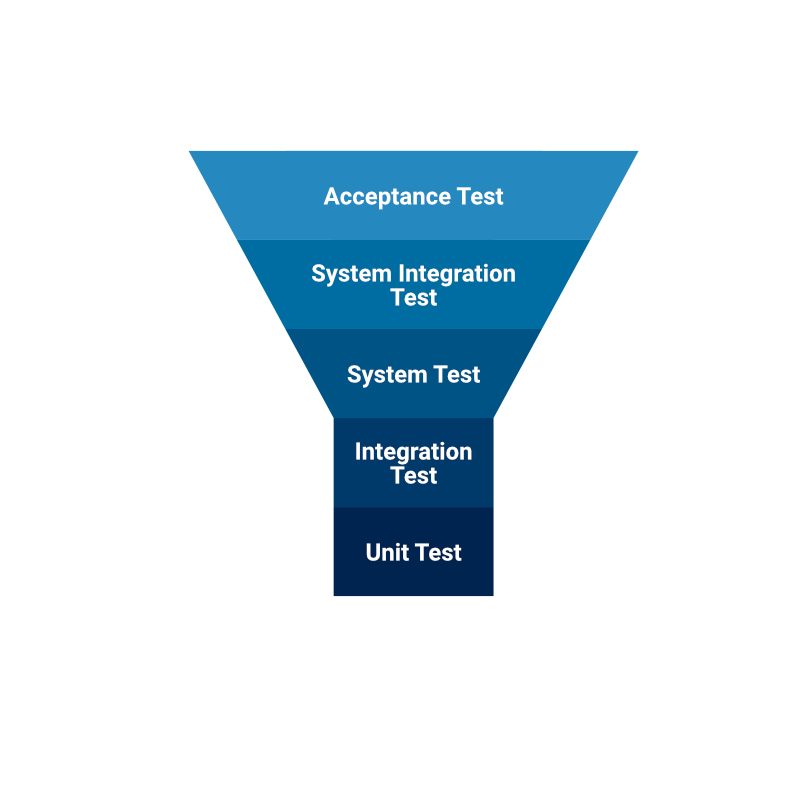

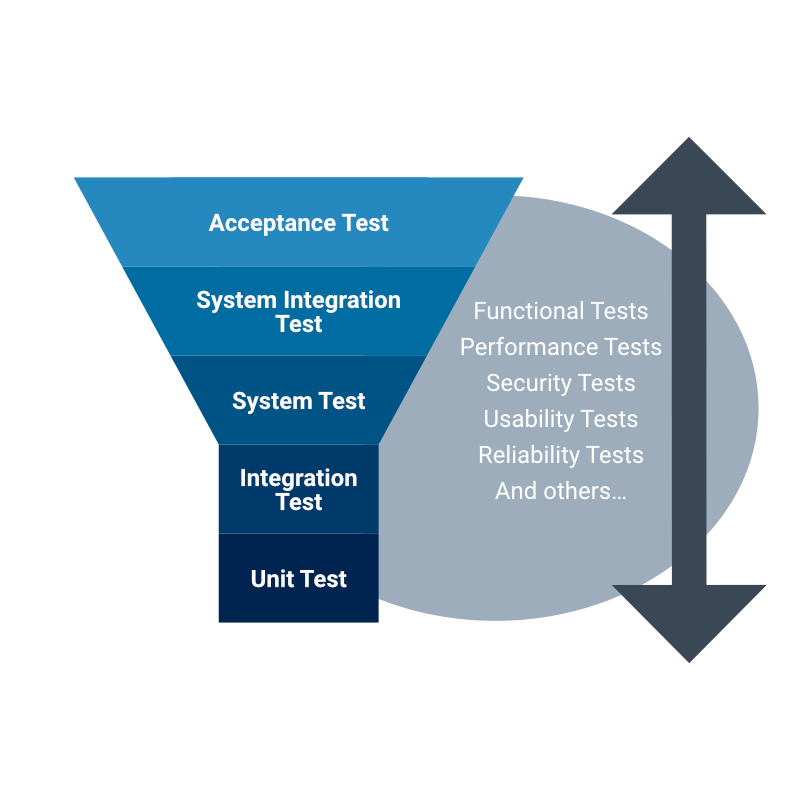

There are two major ways to see system testing – by scope, and by when it occurs. To visualize the scope perspective, in Figure 2 we see a funnel-like image where the smallest tests (unit or component tests) are at the bottom because of the small scope. At the top of the funnel is acceptance testing because it can span systems and organizations.

Figure 2 – System Testing Scope

System integration testing is just below acceptance testing in the funnel, and system testing is just below that.

It is important to understand that while the levels of testing should build on previous levels of testing, it is possible to go directly to the high level in some cases. For example, in maintenance testing or testing vendor-developed systems, system and acceptance testing may be the first opportunity to test.

The Nature of Systems

When we think of any type of system, such as the cardiovascular system in humans or transportation systems, just to name a couple, we see the idea of interconnected parts all working together to achieve specific objectives. If a critical component of the system fails, the entire system is subject to failure.

It is interesting that in the U.S. Food and Drug Administration Glossary of Computer System Software Development Terminology, a system is defined as “(1) (ANSI) People, machines, and methods organized to accomplish a set of specific functions. (2) (DOD) A composite, at any level of complexity, of personnel, procedures, materials, tools, equipment, facilities, and software. The elements of this composite entity are used together in the intended operational or support environment to perform a given task or achieve a specific purpose, support, or mission requirement.”

So, we can clearly see a system is more than just software, and system testing is more than just software testing.

System Testing in the V-Model

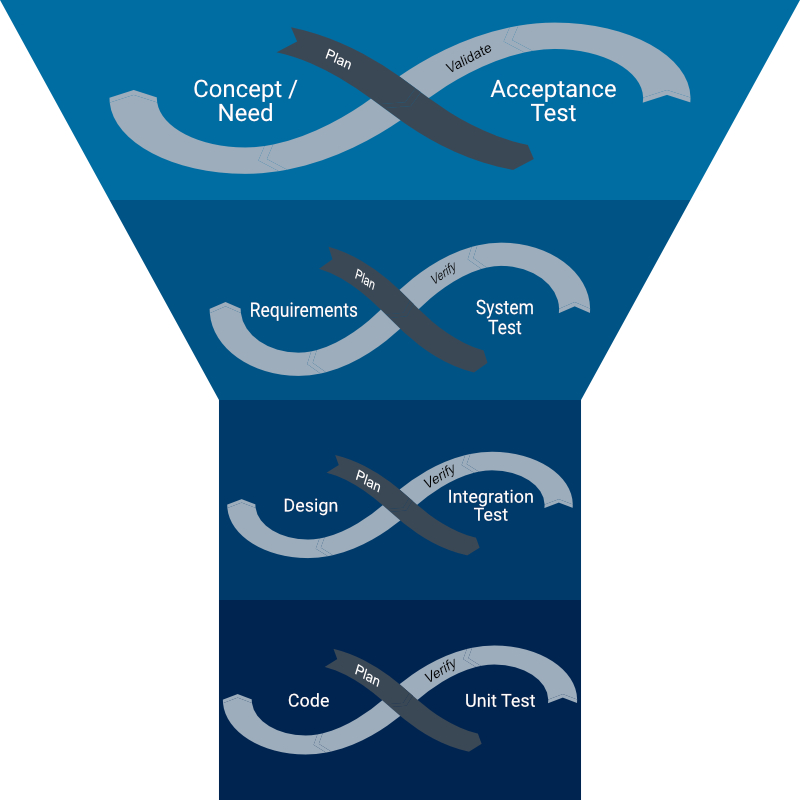

If we consider the version of the V-Model that I favor most (Figure 3), system testing is related to requirements. This means that in this view, system testing is verification that specified requirements have been met.

This is different from User Acceptance Testing (UAT), which validates user needs have been met, regardless of specifications.

Figure 3 – Representation of the V-Model with System Testing Verifying Requirements

Please keep in mind there are literally hundreds of versions of the V-Model that have been published since the paper, “A Comparison of Techniques for the Specification of External System Behavior” by Alan M. Davis in 1988. The earliest version I have seen to date of this model was published by the U.K. Government in 1987 in a book called “The STARTS Guide”.

Unfortunately, the evolution of the V-Model has in many cases left out the idea of validation that occurs in UAT. The impact is that if there is a defect in the requirements, it will not be found until real-world use after release. Validation is the opportunity to test if the original need has been met, regardless of what is described in the specifications.

Why Perform System Testing?

System testing is the first opportunity to test all the major system components together as a whole. Even if all of the components are not in place, early system testing can start to give a picture of how things are shaping up.

System testing is also an opportunity to verify if requirements are being met at the big picture level. If not, there is still a chance to make corrections.

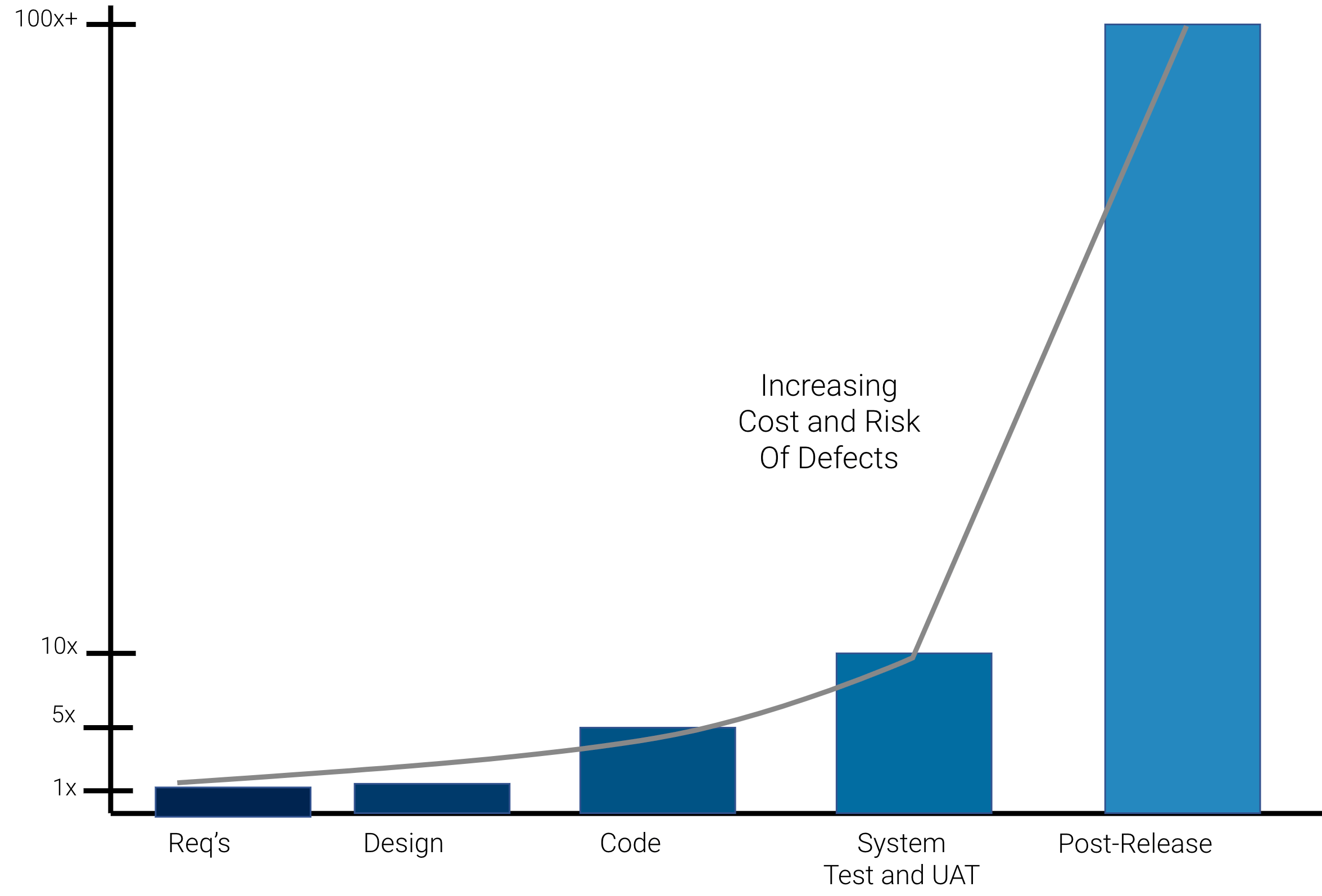

While system testing is typically an activity that occurs near the end of a project, the cost of finding and fixing defects is still lower than after release. Data across thousands of projects worldwide indicate that finding and fixing defects in system testing costs about 5 to 10 times more than in requirements and specifications, but much less than the 100 to 1,000 times multiplier seen after release (Figure 4). This is traditionally known as the “1:10:100 Rule”.

Figure 4 – The Relative Cost of Finding and Fixing Defects

It is important and interesting to note that this cost of late-stage defects has been measured and researched by many people over the last 50 years with not much change in the curve, even in agile lifecycles.
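To make the multipliers concrete, here is a minimal arithmetic sketch. The base cost of 200 and the exact multipliers are assumptions chosen for illustration (the upper end of the 5 to 10 times range and the lower end of the 100 to 1,000 times range), not measured data.

```python
# Illustrative multipliers only; real figures vary by project and study.
RELATIVE_COST = {
    "requirements": 1,
    "system_test": 10,   # upper end of the 5-10x range cited above
    "production": 100,   # lower end of the 100-1,000x range cited above
}

def cost_to_fix(base_cost: float, phase: str) -> float:
    """Scale a hypothetical base fix cost by the multiplier for the phase."""
    return base_cost * RELATIVE_COST[phase]

base = 200.0  # hypothetical cost of fixing one defect during requirements
for phase in RELATIVE_COST:
    print(f"{phase:>12}: ${cost_to_fix(base, phase):,.0f}")
```

Under these assumptions, a defect that costs 200 to fix in requirements costs 2,000 in system testing and 20,000 in production.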

However, there has recently been a sharp increase in the cost of production defects. This is primarily due to ancillary legal and regulatory costs, such as those imposed after a security incident. In essence, the collected metrics show that we are now in the territory of five- and six-figure defect costs.

When Does System Testing Occur in Software Development?

System testing is a level of testing that occurs once enough of the system has been completed to accomplish a working version. The answer to “when” system testing should occur depends on your software development lifecycle (SDLC).

The practice of building huge monolithic systems which require years to complete is no longer common in software development. This is a good thing because those systems were prone to failure due to their complexity and large scope.

Today, the trend is to build systems consisting of smaller iterations of components that can grow into the eventual desired scope.

In just about all life cycles (sequential, iterative, and incremental), early, simple tests can be performed to verify basic functionality and integration. These are known as “smoke tests” and “sanity tests”.
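As a rough illustration of a smoke test, the sketch below runs a handful of quick checks and reports which ones failed. The check functions (`app_starts`, `database_reachable`, `login_page_loads`) are hypothetical stand-ins; in a real project each would probe the actual system under test.

```python
from typing import Callable

def run_smoke_tests(checks: dict[str, Callable[[], bool]]) -> list[str]:
    """Run each quick check and return the names of the ones that failed."""
    failed = []
    for name, check in checks.items():
        try:
            ok = check()
        except Exception:
            ok = False  # any crash counts as a smoke-test failure
        if not ok:
            failed.append(name)
    return failed

# Hypothetical stand-ins for real probes of the system under test.
def app_starts() -> bool:
    return True

def database_reachable() -> bool:
    return True

def login_page_loads() -> bool:
    return True

failures = run_smoke_tests({
    "app starts": app_starts,
    "database reachable": database_reachable,
    "login page loads": login_page_loads,
})
if failures:
    print(f"build rejected: {failures}")
else:
    print("build accepted for further testing")
```

The point of such a test is speed: it answers only whether the build is stable enough to be worth testing further, not whether the functionality is correct.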

What is System Testing in Software Engineering?

Since system testing is very broad in scope, many roles and activities are often involved. For example:

- Software developers can contribute technical knowledge and help with performing technical tests such as performance testing

- Business users and business analysts can contribute business process knowledge and help with performing business-oriented tests such as end-to-end scenario testing

- Software testers can perform functional tests

- Usability specialists can contribute usability and user experience (UX) knowledge and help with performing or facilitating usability tests

System Testing in an Agile Context

One of the concerns in agile software development is that of testing at the system level. This is due to the incremental delivery nature of agile.

Consider that there are often multiple teams working on various feature sets, with delivery occurring at various times. Also consider that system testing encompasses more tests than functional testing, such as performance tests.

So, how does one perform system testing with multiple tracks of development?

One good practice I have seen work well is to have a separate test team responsible for testing the work of multiple teams before a major delivery is made (Figure 5).

The purpose of this system test is for interoperability, performance, and other types of testing that require the interaction of teams and the functions they create.

While this may go against some people’s ideas about agile development workflow and delivery, sometimes such a test is needed.

There is a danger, however, that a system test can turn into a bottleneck. One of the best preventative steps is to do as much non-functional and integrated testing as early as possible.

One important thing to note is that Continuous Integration (CI) is not sufficient or intended to replace functional integration and interoperability testing. Nor are CI tests the equivalent of regression tests. CI testing is intended to verify the stability of a build, not to fully verify or validate functionality.

Who Performs System Testing?

System testing is often performed by an independent test team either within an organization or external to the organization for Independent Verification and Validation (IV&V). You can learn more about how IV&V is performed by referring to IEEE Standard for System, Software, and Hardware Verification and Validation 1012-2016.

There may be times when system testing is performed as a larger team that includes developers, testers, operations personnel and business domain experts. This is a very effective way to involve needed stakeholders in testing.

Developers can play an important role in resolving technical problems, answering questions, and facilitating or performing technical tests such as performance testing.

Other specialists may be needed to perform tests such as security and usability testing.

Which Types of Tests Occur in System Testing?

When we consider that system testing is a level of testing, often driven by the scope of testing (a system) and the timing of the testing (project phase or release cycle), then we see that the types of testing can be many.

Functional tests, such as black-box tests, are often predominant in system testing. However, non-functional tests are also common and needed. These tests include performance, security, usability, interoperability, and other types of testing (Figure 6).

As stated earlier, it is very good practice to perform all of the types of testing that are applicable to your context as early as possible, such as in unit testing.

Referring once again to the FDA guidance, system testing comprises many types of testing:

“System-level testing demonstrates that all specified functionality exists and that the software product is trustworthy. This testing verifies the as-built program's functionality and performance with respect to the requirements for the software product as exhibited on the specified operating platform(s).

System-level software testing addresses functional concerns and the following elements of a device's software that are related to the intended use(s):

· Performance issues (e.g., response times, reliability measurements);

· Responses to stress conditions, e.g., behavior under maximum load, continuous use;

· Operation of internal and external security features;

· Effectiveness of recovery procedures, including disaster recovery;

· Usability;

· Compatibility with other software products;

· Behavior in each of the defined hardware configurations; and

· Accuracy of documentation.”

To give a quick overview of the more common types of testing, here is a list with brief definitions and examples:

- Functional tests – Testing that the desired functionality is present and correct, such as the ability to perform a transaction from start to finish (end-to-end) correctly

- Reliability – Testing that the system can perform reliably over a period of time, and that the system can be depended upon to deliver correct functionality consistently. For example, does the system produce the same outcomes time after time with no variation? Another example is to determine the mean time between failures (MTBF) when the system is being used over an extended period of time.

- Performance – Testing that the system can perform needed tasks within specific response timeframes, or to verify that the system can process a given amount of data in a given amount of time

- Security – Testing that the system has defenses and controls to prevent unauthorized transactions and access, and to protect the privacy of data, and that such defenses and controls are working effectively and efficiently.

- Usability – Testing the ease or difficulty that users may have in performing given tasks

- Interoperability – Testing the correctness of processing data after it has been transferred or received from another system or subsystem
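The MTBF measure mentioned under reliability is simple to compute: divide total operating time by the number of failures observed. The figures below (720 hours of operation, 3 failures) are hypothetical values for illustration.

```python
def mean_time_between_failures(operating_hours: float, failure_count: int) -> float:
    """MTBF = total operating time / number of failures observed."""
    if failure_count <= 0:
        raise ValueError("MTBF is undefined until at least one failure is observed")
    return operating_hours / failure_count

# Hypothetical extended test run: 720 hours of operation with 3 failures.
mtbf = mean_time_between_failures(720.0, 3)
print(f"MTBF: {mtbf:.1f} hours")
```

With these numbers the system fails, on average, once every 240 hours of operation. Whether that is acceptable depends on the reliability requirements for the system.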

Testing in a System of Systems Context

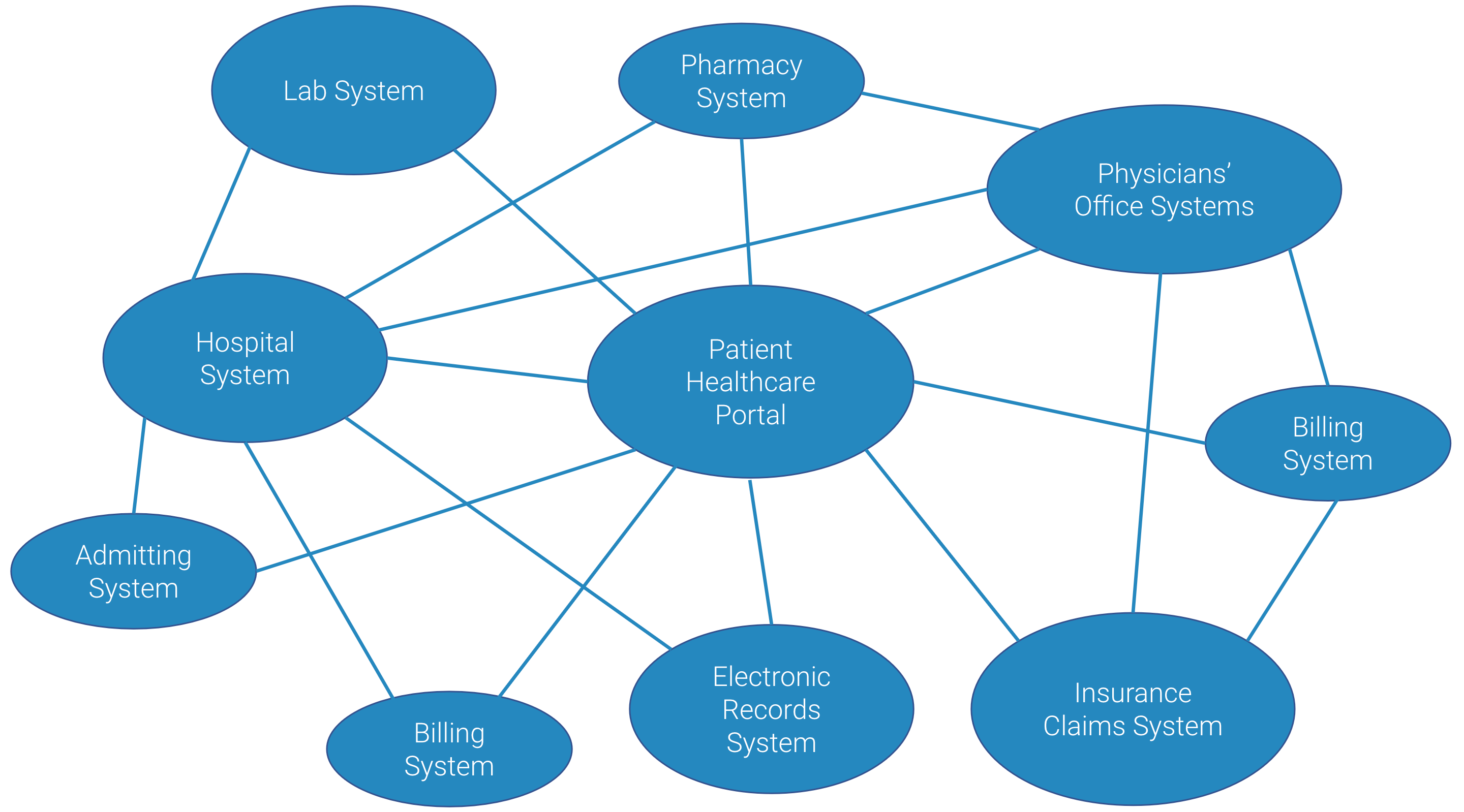

It is not uncommon to see system testing as a part of a larger technical ecosystem. In this context, known as a “system of systems”, it is vitally important to test system integration and interoperability with the other related systems.

An example of this kind of technical ecosystem can be seen in healthcare systems (Figure 7).

When you consider a system of systems such as shown above, the complexity and level of integration can be staggering. It is amazing that the individual systems and integrated systems work together at all!

When testing in a system of systems context, it is best to start with testing your primary system of concern first, then start to test integration and interoperability with the other related systems.

This does not mean that you must complete testing the primary system of concern before performing any system-level integration testing. The point is to determine the stability and readiness of your system before involving others, if possible.

In many cases, you may have limited access to the other systems. Therefore, you will likely need to coordinate your testing with those in other organizations. The planning may require a longer lead time than you might expect, mainly due to the coordination of schedules, tasks, and resource availability. This is a major reason why system test planning is needed.

Sub-system Testing

In many cases, a system is formed from multiple smaller sub-systems. Each of these sub-systems can contain a related set of functionality. For example, an insurance administration system may have sub-systems for claims processing, policy administration, accounting, and so forth.

Each of the sub-systems could perhaps be seen as its own separate system, but in some cases such as legacy systems, sub-systems are kept combined within the larger system.

There are pros and cons to the sub-system approach. A key advantage is that each sub-system can be developed and tested in its own effort and combined into a system test later. A major downside is that the main system is often large and complex which makes it difficult to maintain and test for the life of the system.

Risks and Challenges of System Testing

Environments

System testing also assesses the system behavior in the intended operating environment. In some cases, this may be multiple environments. This can be a challenge as the ability to define and build a suitable system test environment is dependent upon the ability to produce or replicate the target operating environment(s).

Control

In system testing, there are many things going on at one time, or in a relatively close window of time. It is essential to know what is being tested, the state of the environment, the version of the system under test (along with the versions of related items), the status of the test (especially as compared to the expected progress), and the level of defects at any given time. Configuration management (CM) is a critical activity to assure the integrity of the test.
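One way to picture this kind of control is as a snapshot of the test at a point in time. The sketch below is an assumption about what such a record might hold; the class name `RunSnapshot`, its fields, and the sample values are all hypothetical and not taken from any particular tool.

```python
from dataclasses import dataclass

@dataclass
class RunSnapshot:
    """A point-in-time record of what is being tested and how it is going."""
    system_version: str    # version of the system under test
    environment: str       # which test environment is in use
    planned_to_date: int   # tests that should have been executed by now
    executed: int
    passed: int
    open_defects: int

    def progress_gap(self) -> int:
        """Positive means testing is behind the expected progress."""
        return self.planned_to_date - self.executed

    def pass_rate(self) -> float:
        """Fraction of executed tests that passed (0.0 if none have run)."""
        return self.passed / self.executed if self.executed else 0.0

# Hypothetical values for illustration.
snapshot = RunSnapshot(
    system_version="2.4.1-rc3",
    environment="staging",
    planned_to_date=120,
    executed=90,
    passed=81,
    open_defects=14,
)
print(f"behind plan by {snapshot.progress_gap()} tests, "
      f"pass rate {snapshot.pass_rate():.0%}, "
      f"{snapshot.open_defects} open defects")
```

Capturing the version and environment alongside the results is what ties a test outcome back to a specific configuration.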

People

As we have seen, system testing can and should involve people in a variety of roles. These people need to have the appropriate levels of skills and training, plus be able to work together as a team. Good leadership is needed to keep a system test on track.

Starting Too Early

Sometimes, the test manager is pressured to start system testing early, or perhaps before things are ready, despite the schedule. The fallacy is that some people believe that just because an activity has started, it will show progress.

In actuality, starting testing too early can flood the developers with early defect reports to investigate and fix. This influx can take away valuable time needed to actually finish the development work. When this occurs, the risk of project failure is not only high, it is imminent.

Starting Too Late

One of the most common scenarios is when system testing is delayed due to delays in preceding activities. The temptation (and oftentimes, the reality) is that the time allocated to system testing is cut short to make a delivery deadline or reduce the lateness of delivery.

The reality is that just moving the finish line doesn’t help overall because the defects will eventually occur in live use (production) where they cost much more to fix.

As the old QAI motto says, “The bitterness of poor quality remains long after the sweetness of meeting the deadline has been met.”

Overcoming the Challenges

Environments – Physical test environments are the most expensive and troublesome way to go. Cloud environments, such as using Docker containers or other means to implement virtual environments, bring a new level of affordability, scalability and control.

Control – Have a system test plan and a good dashboard to track key metrics, then refer often to both. This can be accomplished using a test management platform like PractiTest. Adjustments will likely be needed based on what is being seen in test results, so it is important that the platform be highly customizable. For example, if few defects are being found, you might want to review your test cases to see if they are too weak. If too many defects are being found, it is a good sign the system is simply not ready for testing.

People – Take the time to assess your team’s strengths and weaknesses. Provide people with the training they need to perform the job effectively. Also, fill gaps with people who already have the skills needed. As a leader, mentor your team to grow into the roles needed.

Scheduling – Have entry and exit criteria which are appropriate to the risk and are supported by project management and senior management.
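Exit criteria are easiest to enforce when they are stated as measurable thresholds. The following is a minimal sketch of such a check; the criteria names, threshold values, and sample metrics are assumptions for illustration, and real thresholds should reflect the risk of the project.

```python
# Hypothetical exit criteria; real thresholds should reflect project risk.
EXIT_CRITERIA = {
    "min_pass_rate": 0.95,
    "max_open_critical_defects": 0,
    "min_requirements_covered": 1.0,
}

def exit_blockers(metrics: dict[str, float]) -> list[str]:
    """Return a description of every exit criterion that is not yet met."""
    blockers = []
    if metrics["pass_rate"] < EXIT_CRITERIA["min_pass_rate"]:
        blockers.append(f"pass rate {metrics['pass_rate']:.0%} is below target")
    if metrics["open_critical_defects"] > EXIT_CRITERIA["max_open_critical_defects"]:
        blockers.append(f"{metrics['open_critical_defects']:.0f} critical defects still open")
    if metrics["requirements_covered"] < EXIT_CRITERIA["min_requirements_covered"]:
        blockers.append("not all requirements have been covered")
    return blockers

# Hypothetical metrics taken from a test dashboard.
metrics = {"pass_rate": 0.97, "open_critical_defects": 2, "requirements_covered": 1.0}
blockers = exit_blockers(metrics)
if blockers:
    print(f"not ready to exit system test: {blockers}")
else:
    print("ready to exit system test")
```

Agreeing on thresholds like these before testing starts makes it harder for schedule pressure alone to decide when system testing ends.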

What is a System Test Plan?

A system test plan should contain:

- An overview of the test, including the test objectives, which form the scope of testing

- An overview of the system to be tested

- The test approach, based on the test strategy

- The types of testing to be performed, based on risks and requirements

- Functions to be tested

- Functions not in the scope of testing

- Test schedules

- Test team members and their responsibilities

- Risks and contingencies

- How defects will be reported

- Entry and exit criteria (pass/fail criteria)

Why Have a System Test Plan?

Many organizations have gone away from the practice of having test plans. However, consider the list of topics above for a system test plan. Do you really want to make all this up as you go along?

The great value of a test plan is that you can give thought in advance of the test and be able to communicate the goals and scheduling of the test across the organization. In fact, you may need to communicate these details across organizations, as in the case of system-level integration testing.

Yes, it is true, as the quote attributed to many generals says, “No plan survives the first contact with the enemy.”

However, that should not keep us from defining a plan, even if you are in an agile SDLC.

Summary and Conclusion

System testing is an all-encompassing level of testing that often occurs toward the end of a software development cycle. However, the planning of system testing can start very early in system development as user requirements and other specifications are being developed.

System testing often includes multiple types of testing, performed by people in a variety of roles. The main goal of system testing is to verify that specified requirements have been met.

It is very helpful to have a system test plan to communicate the many details of system testing across the organization and to other organizations that may need to be involved.

And always remember that cutting system testing time may help meet the deadline, but the defects simply move to production, where they are far more costly and risky.

By Randall W. Rice

Last Update: 10/21/2020

Randall W. Rice, CTAL

Randall W. Rice is a leading author, speaker, consultant and practitioner in the field of software testing and software quality, with over 40 years of experience in building and testing software projects in a variety of domains, including defense, medical, financial and insurance.

You can read more at his website.