Introduction

The simplest view of software testing is the concept of “If I do something, then I should see an expected result”. Or, if I don’t know exactly what to expect, then “If I do something, then what do I see as a result?”

This cause and effect nature of testing is known as functional testing. At the surface, it appears simple and easy – and it can be. However, in more complex situations, functional testing can be much more detailed and involved.

In this article, we will explore some of the deeper concepts of functional testing.

Table Of Contents

- What is Functional Testing

- The Basis of Functional Testing

- Functional Testing Examples

- Functional Testing Types

- Functional vs Non-Functional Testing

- How to Do Functional Testing?

- Functional Testing Best Practices

- How Do Functional Testing Tools Work?

- Conclusion

What is Functional Testing?

Functional testing is based on exercising functions in software that can show distinct outcomes when certain stimuli are introduced or performed. This is where the cause and effect nature of functional testing can most often be seen. (Fig. 1)

Fig. 1 – The Cause and Effect Nature of Functional Testing

Functional testing can be seen in many contexts. While we tend to think of most software as being event-driven, such as in Graphical User Interfaces (GUIs), or just UI, there are other contexts such as batch processing and embedded software which also contain functionality that is controlled by varying input or stimuli.

One might think of functional testing as taking a snapshot: the image captured is what occurs at the instant a single input is performed.

Another very common way to describe functional testing is “black box” testing. In black box testing, the idea is that you have no knowledge of how the item you are testing works from an internal perspective (such as knowing how the code is written). All you know is that if you do something from the outside, like clicking on a button, something will happen.

In this article we will cover many terms and try to explain each one. However, if you need further definition of terms, you can visit the Software Testing Glossary.

The Basis of Functional Testing

Functional tests are often based on the various conditions described in formal specifications, such as user requirements, use cases and models. Other bases for testing might include user stories, system documentation and user experience.

If written correctly, the basis of testing should contain specific instructions on what the system or application should do in certain circumstances. These instructions can then be the basis of test conditions, which are the key element of functional test cases.

A test condition is simply an action, state of behavior, event, or similar stimulus which should produce an observable result. For example, as an ATM user, if I choose to withdraw money from my checking account, the money should come from checking and no other account. The amount withdrawn should be debited from checking and no other account. The correct amount of the transaction should be recorded and debited.
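The ATM condition above can be written as an executable check. This is only a minimal sketch: the `Account` class and the `withdraw` function are hypothetical stand-ins invented for illustration, not part of any real ATM system.

```python
from dataclasses import dataclass


@dataclass
class Account:
    """Hypothetical in-memory account used only for this illustration."""
    name: str
    balance: int  # whole dollars, to keep the arithmetic exact


def withdraw(accounts: dict[str, Account], source: str, amount: int) -> int:
    """Debit `amount` from the named account and return the amount dispensed."""
    account = accounts[source]
    if amount <= 0 or amount > account.balance:
        raise ValueError("invalid withdrawal amount")
    account.balance -= amount
    return amount


def test_withdrawal_debits_only_checking() -> None:
    accounts = {
        "checking": Account("checking", 500),
        "savings": Account("savings", 1000),
    }
    dispensed = withdraw(accounts, "checking", 100)

    assert dispensed == 100                      # correct amount recorded
    assert accounts["checking"].balance == 400   # debited from checking
    assert accounts["savings"].balance == 1000   # no other account touched


test_withdrawal_debits_only_checking()
```

Each assertion maps to one of the observable results named in the test condition, which is what makes the condition testable.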

Functional Testing Examples

To give more clarity to the definition of functional testing, consider the following examples. These are just a few ways functional testing can be seen.

- Testing features on a web page, such as searches, menus, links, and so forth

- Performing end to end system tests in which you test a series of functions that are performed to complete a major functional task, such as ordering a product on a website as a new customer.

- Performing User Acceptance Testing (UAT) – The main concern is if the application or system does what the user needs it to do in performing their daily work.

Developers can also perform functional testing of the code they create and maintain. However, if the tests are based on the structure of the code, they would be considered structural tests, not functional tests. Both structural and functional testing are needed to get a complete view of software integrity, behavior and functionality.

Features of Functional Testing

Functional testing is often associated with specific features or functionality in a software application or system. These features may be fully implemented so that the tester can try them before actually designing and performing a test, or they may just exist in specification form.

Functional testing starts to get more complex and involved when you start to perform multiple related functions together. But this is also when functional testing starts to go below the surface of the application where defects can be more deeply embedded in the code.

Functional testing often exhibits the following features:

- Simple to understand at first (cause/effect)

- Becomes more complex when testing functions in combination with other functions (such as seen in decision tables)

- Can be done at depth by using techniques such as boundary value analysis and state transition testing

- Can range from very informal (such as exploratory testing) to very formal using formal test design methods and detailed test cases and test procedures.

- Can be automated in many cases

Functional Testing Types

As in many software testing activities, functional testing comes in various types. The idea of a testing type is that it is aimed at a particular target, objective or risk.

For example, some functional tests are confirmatory. These tests, often called “positive tests”, are intended to confirm that the functionality is working correctly. However, a confirmatory test does not try to find what is not working correctly. So, to get better coverage in testing, other tests, often called “negative tests”, are needed.

Here are some common functional test types:

Confirmation Tests

As explained above, these tests confirm correct functionality. These tests are often done after a change is performed to make sure the change was implemented correctly. However, confirmation tests can be performed at any time.

Regression Tests

These tests are typically a subset of other functional tests, with the goal of making sure something that used to work still works correctly. Like confirmation testing, regression testing is often done after changes have been made. However, since external factors can affect software functionality, some people automate regression tests and run them in a continual manner.

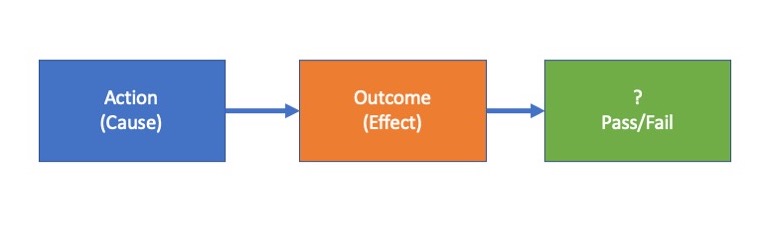

Equivalence Partitioning (EP) Tests

These tests are designed to sample software functionality by testing just one or a few conditions from a larger set of conditions, all of which have equivalent functional outcomes. It is important to note that your EP tests should include invalid conditions if those partitions exist.

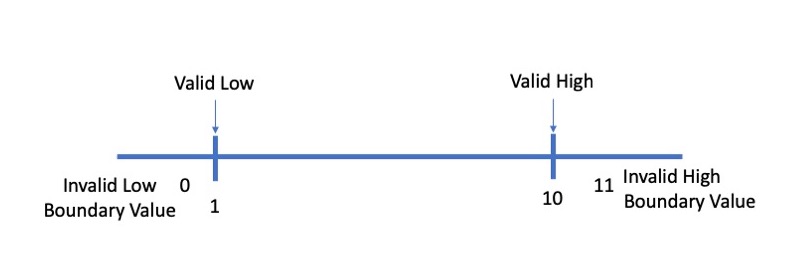

For example, you may be testing values for a field where 1 through 10 are the only valid entries. You would want to consider if 0 could be input, as well as negative numbers. Invalid high values should also be considered, as well as alpha characters, special characters, spaces, etc. (Fig. 2)

Fig. 2 – Equivalence Partitions
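As a rough sketch of the 1 through 10 example, the snippet below picks one representative input from each partition and checks it against a validator. The `accepts_quantity` function and the sample values are assumptions made for illustration; a real field may define its partitions differently.

```python
def accepts_quantity(raw: str) -> bool:
    """Hypothetical field validator: only whole numbers 1 through 10 are valid."""
    text = raw.strip()
    if not (text.isascii() and text.isdigit()):
        return False  # rejects blanks, signs, letters and special characters
    return 1 <= int(text) <= 10


# One representative input per partition, with the expected outcome.
PARTITIONS = {
    "valid (1 through 10)": ("5", True),
    "zero": ("0", False),
    "negative number": ("-3", False),
    "invalid high value": ("42", False),
    "alpha characters": ("abc", False),
    "special characters": ("#!", False),
    "spaces only": ("   ", False),
}

for name, (sample, expected) in PARTITIONS.items():
    assert accepts_quantity(sample) is expected, f"partition failed: {name}"
```

Seven tests stand in for every possible input, which is the sampling idea behind EP. Only one of the seven is a positive test.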

Boundary Value Analysis (BVA) Tests

These tests are designed to test software at the edges or boundaries where behavior changes. These tests can reveal defects where the logic has been incorrectly coded, or a business rule perhaps is not correctly understood. As in the previous example, where you may be testing values for a field where 1 through 10 are the only valid entries, you would want to test 0, 1, 10 and 11 if applying the “2-value” method (Fig. 3). The “3-value” method would test 0, 1, 2, 9, 10 and 11. (Fig. 4)

Fig. 3 – BVA Using the 2-Value Method

Fig. 4 – BVA Using the 3-Value Method

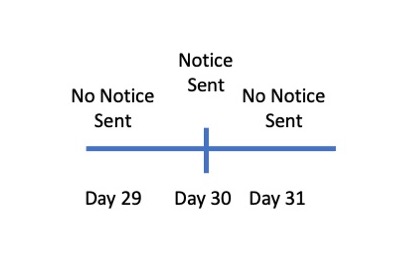
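The two methods can be sketched as a small helper that derives the test inputs from the valid range. The function name `boundary_values` is hypothetical, and the sketch assumes an inclusive integer range.

```python
def boundary_values(low: int, high: int, three_value: bool = False) -> list[int]:
    """Return BVA test inputs for an inclusive integer range [low, high]."""
    # 2-value method: each boundary plus its nearest neighbor outside the range.
    values = {low - 1, low, high, high + 1}
    if three_value:
        # 3-value method: also the nearest neighbor inside the range.
        values |= {low + 1, high - 1}
    return sorted(values)


print(boundary_values(1, 10))                    # [0, 1, 10, 11]
print(boundary_values(1, 10, three_value=True))  # [0, 1, 2, 9, 10, 11]
```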

One might look at the 3-value conditions and think they are redundant, and they could be. But consider a single threshold situation where at a certain value, something is supposed to occur. For example, a late payment due notice is sent 30 days after the payment was due. (Fig. 5) You would want to test that no notice is sent on day 29, a notice is sent on day 30, and no notice is sent on day 31. To fully test this example, you would really need the 3-value approach.

Fig. 5 – The 3-Value BVA Method Used in a Single Threshold Situation

One final point about BVA is that it finds one type of defect – when an invalid relational operator is used, either in the code or specification. For example, perhaps the rule should read “>=” instead of “>”.
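To see why the third value matters, here is a small sketch of the late notice example. It assumes the rule means a notice goes out on day 30 and on no other day, so the three checks are days 29, 30 and 31. Both functions are hypothetical, and the second one deliberately contains the wrong relational operator.

```python
def notice_due(days_late: int) -> bool:
    """Assumed intended rule: the notice is sent on day 30 only."""
    return days_late == 30


def notice_due_buggy(days_late: int) -> bool:
    """Defective variant: uses >= where == was intended."""
    return days_late >= 30


# Expected outcome for each of the three boundary checks.
EXPECTED = {29: False, 30: True, 31: False}


def failing_days(rule) -> list[int]:
    """Return the days on which `rule` disagrees with the expected outcome."""
    return [day for day, want in EXPECTED.items() if rule(day) is not want]


print(failing_days(notice_due))        # []
print(failing_days(notice_due_buggy))  # [31]
```

Tests on days 29 and 30 alone would pass against the defective rule. Only the day 31 check exposes it.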

Decision Table Tests

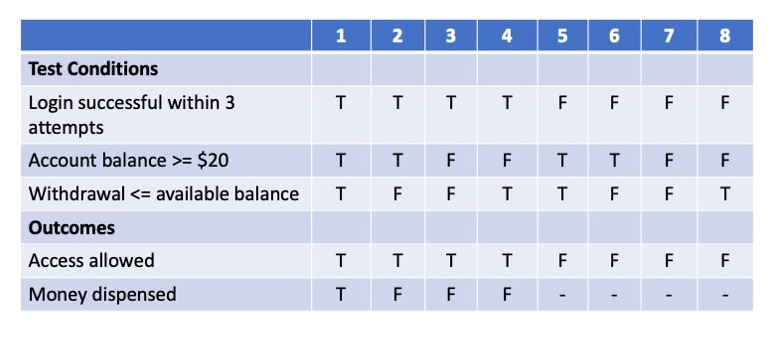

These tests are based on combining related logical decisions together as they are constructed in a decision table (Fig. 6). These tests are very valuable whenever you understand at least one of the logical rules. From there, you can logically define the others. Each column represents a test case.

In this example, suppose we have a business requirement that states, “If a customer successfully logs in to the ATM with a correct card and PIN, has an available account balance greater than or equal to $20, and the withdrawal amount is less than or equal to the available balance, money is dispensed according to the amount requested.”

Although this is not an overly complex requirement, there are three conditions involved which can make the requirement a bit difficult to parse and understand. Note that in the decision table we start with the happy path first where all conditions are true. Once we express that rule, then we start to vary the conditions between true and false.

Also note that if the user cannot gain access to the ATM, the other conditions become untestable. These are indicated by a dash in the table. Sometimes these illogical or untestable conditions may be combined to reduce the table size. However, in other cases, these illogical test condition combinations may make good negative tests.

Fig. 6 – Simple Decision Table
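A decision table like this can also be generated rather than drawn by hand. The sketch below is one possible encoding of the ATM requirement and may not match Fig. 6 column for column. The "refuse" action name and the choice to collapse every failed login into a single rule are assumptions.

```python
from itertools import product

CONDITIONS = ("Valid card and PIN", "Balance >= $20", "Amount <= balance")


def build_decision_table() -> list[tuple]:
    """Return one tuple per rule: three condition values plus the action."""
    rules = []
    # Login succeeded: vary the two remaining conditions, happy path first.
    for balance_ok, amount_ok in product([True, False], repeat=2):
        action = "dispense" if balance_ok and amount_ok else "refuse"
        rules.append((True, balance_ok, amount_ok, action))
    # Login failed: the other conditions cannot be evaluated (shown as a dash).
    rules.append((False, None, None, "refuse"))
    return rules


def show(value) -> str:
    """Render a condition value as T, F, or a dash for 'not testable'."""
    return "-" if value is None else ("T" if value else "F")


for number, (*conditions, action) in enumerate(build_decision_table(), start=1):
    cells = " ".join(show(value) for value in conditions)
    print(f"Rule {number}: {cells} -> {action}")
```

Three conditions would give eight rules in a full table. Collapsing the untestable combinations leaves five, each of which becomes a test case.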

State Transition Tests

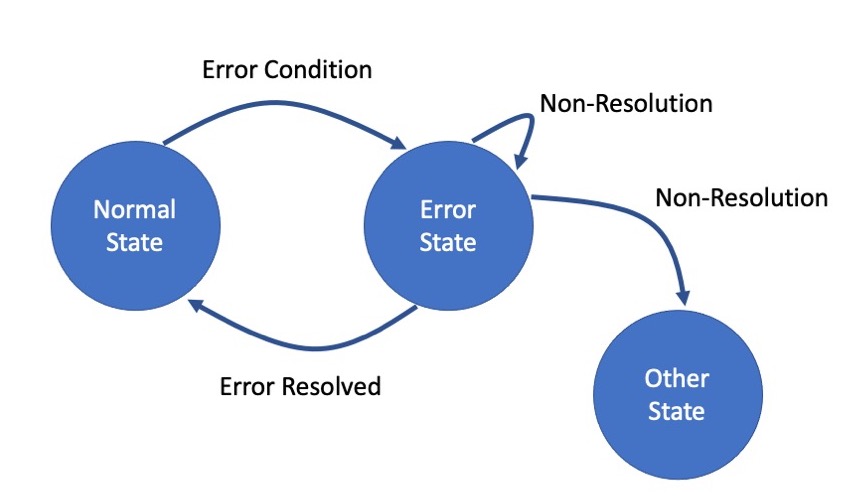

These tests are based on the concept that at any given point in time, an application or system is in one and only one state of behavior or operation. The simplest example is the normal state vs. error state. The things you are able to do in the normal state may no longer be possible to do in the error state because the error state must be resolved first.

State transition testing is often based on a model which shows all states and all transitions (Fig. 7). The minimum coverage goal is to cover each state and each single transition at least once.

In Figure 7, note that there is a possible “Other State” shown, with no transition to any other state. This is to show the possibility of being in an error state and causing a condition that does not resolve the issue, but throws the application into a state where the outcome is unknown.

This is a common scenario that allows an attacker to take advantage of error states to gain access to a system or application.

Fig. 7 – Sample State-Transition Diagram
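The coverage goal can be checked mechanically once the model is written down as data. The states and events below are hypothetical and much simpler than a real model (they are not taken from Fig. 7). The sketch only shows how to measure which transitions a set of tests has exercised.

```python
# Hypothetical model: (current state, event) -> next state.
TRANSITIONS = {
    ("Normal", "fault"): "Error",
    ("Error", "recover"): "Normal",
    ("Error", "bad_input"): "Other",  # dead end: no transition leads out
}


def run(events: list[str], start: str = "Normal") -> tuple[str, list[tuple[str, str]]]:
    """Replay events against the model; return the final state and path taken."""
    state = start
    path = []
    for event in events:
        next_state = TRANSITIONS.get((state, event))
        if next_state is None:
            raise ValueError(f"no transition for {event!r} in state {state!r}")
        path.append((state, event))
        state = next_state
    return state, path


def covered_transitions(test_cases: list[list[str]]) -> set[tuple[str, str]]:
    """Collect every (state, event) pair exercised by the given test cases."""
    covered = set()
    for events in test_cases:
        _, path = run(events)
        covered.update(path)
    return covered


tests = [["fault", "recover"], ["fault", "bad_input"]]
missing = set(TRANSITIONS) - covered_transitions(tests)
print(missing)  # set() means every transition was covered at least once
```

An event that is not defined for the current state raises an error here. In a real test, that same situation is worth probing deliberately, since it is how an application ends up in an unknown state.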

Functional vs Non-Functional Testing

You might be asking, “What about performance testing, usability testing, and so forth?” Yes, those are also test types, but are considered non-functional tests.

Non-functional testing can be confusing because in many cases, you are still testing functionality, but just testing multiple functions over an extended period of time to verify or validate a particular attribute or characteristic of software. These software attributes are defined in ISO/IEC standard 25010, which breaks them down further into sub-attributes.

I prefer to use the terms “attribute testing” or “characteristic testing” as opposed to “non-functional testing” to avoid this confusion.

One way to think of software and systems in general is to consider:

What should the application do?

What should the application be?

The “do” side of the picture is the functional perspective, which can be tested by functional tests.

The “be” side of the picture is the attribute or behavior perspective, which can be tested by “non-functional” or attribute/characteristic tests.

A good example of non-functional testing is performance testing. You can’t test performance of software or of a system by just performing one function. You must perform a functional process with certain amounts of load over a certain period of time. This kind of test is very dynamic and in contrast to snapshot mode, resembles more of a movie mode where you are watching the test play out over time.

How to Do Functional Testing

The exact process of performing functional testing will vary depending on your test strategy and approach. For example, some organizations may have a very defined and structured test approach while others may use an exploratory approach.

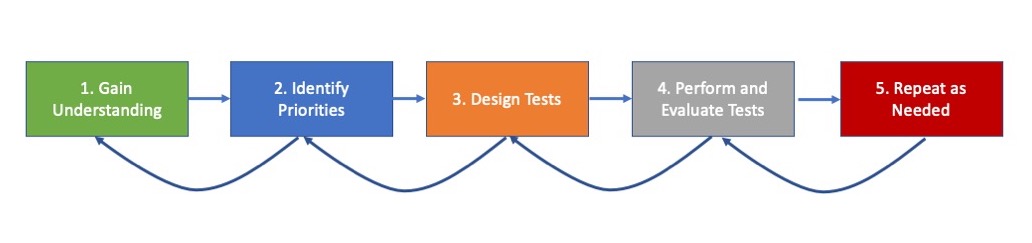

With that understanding, here is a basic flow of functional testing. This is not necessarily a linear process, as it is not unusual to iterate around some steps. For example, you may learn in performing tests that more tests are needed. So, you may need to revisit the test design step. Or, if you are running short on time, you may need to re-prioritize tests during the test and evaluation step. (Fig. 8)

Fig. 8 – The Iterative Flow of Functional Testing

In Figure 8, the arrows just go back one step, but in reality, any step can be repeated at any time.

Step 1 – Gain Understanding of the Item to be Tested

This understanding may come from documentation, requirements, user stories or experience. The main concept is that in order to fully test something, you need to learn something about it, especially its expected behavior.

Step 2 – Identify Priorities

This is an essential step to focus on the most important items to test first. This determination can be based on relative risk, criticality, business need, or other criteria. It’s important to note that it is common to test the same item at various levels of priority with varying levels of test rigor.

For example, you may want to first do smoke testing or sanity testing, then proceed to more rigorous tests like boundary value tests once you have proven the software works at a basic functional level. Otherwise, you may spend a lot of time doing detailed rigorous testing on something that doesn’t even work at a basic level.

Step 3 – Design Tests

If you are performing basic functional tests, you may be able to get by with informal tests without a lot of design or definition. But, if you want to go deeper in testing where functions interact a lot, then you will want to take the time to design tests that work together in a way that can find some of those deeply embedded defects.

“Single condition tests” are focused on a single test condition and the observable outcome. These are usually easy to design, perform and evaluate, but they can lead to large collections of test cases that are simple in nature.

To get more efficiency in testing and to find the more elusive defects, combining multiple test conditions in the same test case is often needed.

As an example, instead of just testing a new customer ordering a product, consider a new customer in another country ordering over $10,000 in products and paying by electronic funds transfer.

Another benefit of designed and documented tests is repeatability and consistency. This becomes especially important in regression testing.
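To make the difference concrete, the sketch below lists a few condition values for an order function and compares single-condition tests with combined ones. The condition names and values are hypothetical, chosen to match the ordering example above.

```python
from itertools import product

# Hypothetical condition values for an order-entry function.
CONDITIONS = {
    "customer": ["new", "existing"],
    "location": ["domestic", "international"],
    "order_total": ["under $10,000", "over $10,000"],
    "payment": ["credit card", "electronic funds transfer"],
}

# Single-condition testing: one test per individual value.
single_condition_tests = sum(len(values) for values in CONDITIONS.values())

# Combined testing: every test case sets all four conditions at once.
all_combinations = [
    dict(zip(CONDITIONS, combo)) for combo in product(*CONDITIONS.values())
]

scenario = {
    "customer": "new",
    "location": "international",
    "order_total": "over $10,000",
    "payment": "electronic funds transfer",
}

print(single_condition_tests)        # 8
print(len(all_combinations))         # 16
print(scenario in all_combinations)  # True
```

The combined scenario exercises four conditions in a single test case. The full set of combinations doubles with each added two-value condition, so in practice you choose the combinations that carry the most risk rather than running them all.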

Step 4 – Perform and Evaluate the Tests

In this step, you either perform pre-designed tests or exploratory tests. Both are functional in nature.

As you perform the functional tests, you are able to evaluate the outcomes and determine if the software is working correctly or not. You may also discover the need to design and create more tests, or to explore the application in a more focused way.

This leads to three important facts about test performance and evaluation:

- They are often done at the same time

- You may need to adjust your tests based on what you are seeing

- You will likely need to repeat your tests in the event you need to retest a bug fix, or for the purpose of regression testing. This implies the fifth step.

Step 5 – Repeat all the above as needed

Repeating tests, even during a development/test/release cycle, is common practice. The amount of repetition depends on the degree of change encountered during the cycle, the number of defects found and fixed, and the number remaining to be found.

The numbers of defects found and fixed are knowable. However, the number of remaining defects is unknowable. Defect prediction models can be used, but even then, we cannot know with certainty when all defects have been found. In fact, exhaustive testing is impossible due to the high numbers of possible test condition combinations.

This brings us to an interesting and important question, “When do I stop testing?” There are real-world resource answers, such as “When the deadline arrives” or “When the money runs out”, but the problem is that defects can remain regardless of our risk assessment, resource levels and so forth.

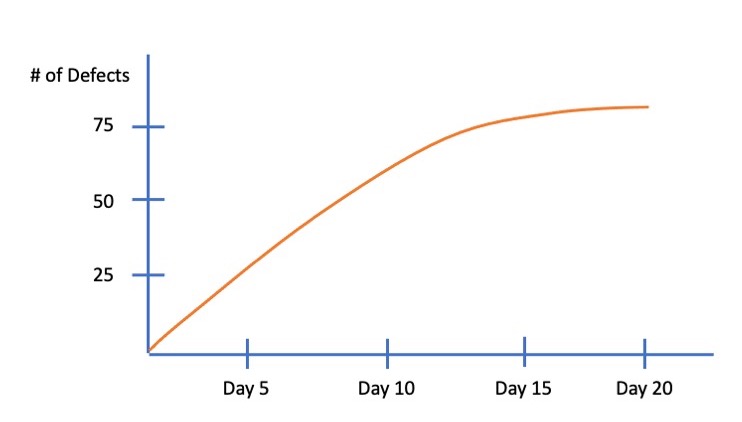

Perhaps a better answer can be found in the analysis of return on investment (ROI) and the idea of diminishing returns. For example, if two weeks of testing yields thirty defects, that is not a bad yield. However, if the next week of testing with the same level of effort yields only three new defects, we have probably reached the best level of defect discovery possible with the people, tests, tools and resources available. (Fig. 9)

Fig. 9 – Diminishing Returns on Testing
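The arithmetic behind this example is simple enough to sketch. The helper name `weekly_yield` is hypothetical, and the figures are the ones from the example above.

```python
def weekly_yield(defects_found: int, weeks: float) -> float:
    """Average number of new defects found per week of testing."""
    return defects_found / weeks


earlier = weekly_yield(30, 2)  # the first two weeks
latest = weekly_yield(3, 1)    # the following week
decline = 1 - latest / earlier

print(f"{earlier:.0f} per week, then {latest:.0f} per week ({decline:.0%} decline)")
# 15 per week, then 3 per week (80% decline)
```

How steep a decline justifies stopping is a judgment call that depends on your context. The calculation only makes the trend visible.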

Functional Testing Best Practices

Anytime “best practices” are discussed, it is important to consider that they must be understood in context with your situation (people, processes, tools and the items to be tested). With that stated, let’s look at a few practices that are common and, in many cases, good and perhaps “best” practice.

- Know your application and its behavior.

- Start with simple test conditions and then vary those conditions to achieve more coverage. Keep in mind that it is in the varying and combining of test conditions that you find defects.

- Get off the “happy path” of using the application. Yes, for sure the application needs to be tested for correct behavior. But, if you don’t test the alternate ways people might perform a task, as well as trying to invoke error conditions, your test effectiveness will likely be low.

-

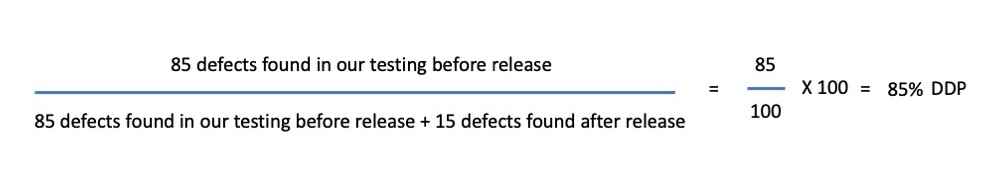

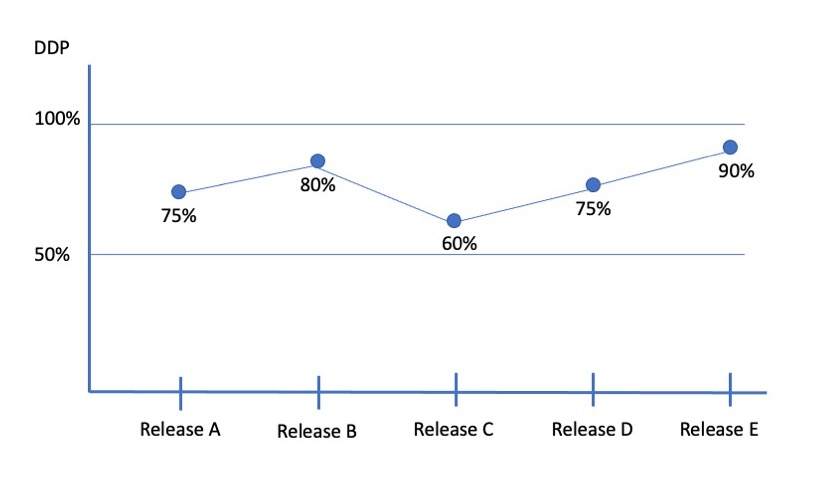

Measure your testing effectiveness. One of the best ways I know is to start measuring Defect Detection Percentage. This is a fairly easy metric to obtain.

Count the total number of defects found by all internal testing in a release and divide that by all defects found in the release both internally and by users and customers after release. (Fig 10). The value of DDP is that you can see over a series of releases how your relative quality is either improving or regressing. (Fig. 11)

- Apply the functional test design techniques covered in this article (BVA, decision tables, state-transition) to achieve negative testing.

- Make your testing efficient in terms of the numbers of tests performed. Remember that each test case must be performed, evaluated and maintained. In this context, having thousands of test cases could actually be a liability if they are simply single-condition cases, or if they have a level of redundancy to them.

- Try to combine smaller test cases into larger test scenarios. This perspective is often missed in functional testing and is why users and customers tend to find defects in live use. Testers fail to test end-to-end scenarios, but this is where you also find the integration defects.

- Consider test automation. This can take the labor, tedium and boredom out of manual functional testing and free your time to test the more complex and human-intensive functions manually.

Fig. 10 – Defect Detection Percentage Calculation

Fig. 11 – DDP Over Time to Show the Relative Quality of Releases
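The DDP calculation described above can be sketched in a few lines. The function name is hypothetical and the release figures are made-up sample data, used only to show the trend over several releases.

```python
def defect_detection_percentage(found_internally: int, found_after_release: int) -> float:
    """DDP = defects found by internal testing / all defects found, as a percentage."""
    total = found_internally + found_after_release
    if total == 0:
        raise ValueError("no defects recorded for this release")
    return 100 * found_internally / total


# Made-up sample data: release -> (found internally, found after release).
RELEASES = {"1.0": (80, 20), "1.1": (90, 10), "1.2": (85, 15)}

for release, (internal, external) in RELEASES.items():
    ddp = defect_detection_percentage(internal, external)
    print(f"Release {release}: DDP = {ddp:.1f}%")
```

Keep in mind that the DDP for a release keeps changing as users report more defects, so the figure for a recent release is provisional.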

Who performs functional testing?

The most common answer to this question is “independent testers”. By that, I mean testers who are not part of the development process. They may be internal or external to the organization. The value-added proposition is that independence often brings more objectivity to the testing process.

However, in reality, everyone on the project can perform functional testing. This includes developers, business analysts, and end-users (such as in User Acceptance Testing). Software testing should be a shared activity.

When functional testing is shared across roles, you get a variety of important perspectives, plus the ability to find defects sooner when they have the least cost and impact. When functional testing rests with one team, all the weight of testing falls there as well. If the testers miss a defect, it often is seen in real-life production use.

Can you automate functional testing?

Yes, in fact, functional testing is where test automation can really show value. But, while it is easy to say, “just automate it”, it is often much more involved than that. It takes special skills and experience to actually find the right automation approach and tools, then to effectively implement them.

The big goal in test automation is to perform functional tests in an unattended, repeatable way. However, this is often an elusive goal for many reasons. One of the main reasons is that the application under test is often subject to change. These changes can break the automation, even when object locators are used. There are many other reasons as well, such as the lack of resources to create and maintain the automation, tools which go out of business or support, lack of a good tool to manage the tests, and so forth.

How Do Functional Testing Tools Work?

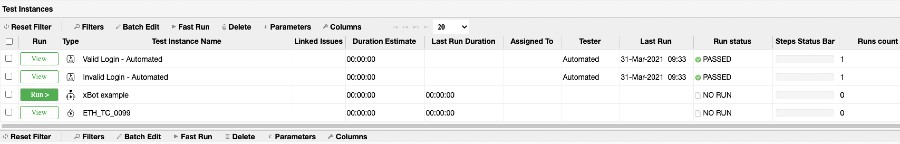

First, we must distinguish between test tools in general and test automation tools. Not all test tools are for test automation. Examples of tools that do not automate tests include test management tools, defect tracking tools, test case generation tools, and so forth. However, test automation tools rely heavily on other tools such as PractiTest for managing, controlling and measuring tests.

Functional test automation scripting is typically achieved in three ways:

- Using a capture/playback (or record/playback) approach where the tool is recording in background as you perform the test. When the test is complete, you can replay the same test using the same data against the same software or a new version of the software. In reality, the replay of the test often requires some modification of the recorded test script to deal with errors and other conditions. A popular and free example is Selenium IDE.

- Writing tests in scripting languages, or even pure coding languages. This is a more laborious approach, but also more robust in the tests that can be created and performed. Selenium WebDriver is one example. An example of the pure coding approach would be writing a test in JavaScript that could be executed against a web application.

- Using a code-less test tool that allows you to create a test by dragging and dropping various objects and actions into an interface. These tools have been around for years and in many cases, some level of code-level editing is still needed to handle unique and exceptional conditions.

It should also be noted that these scripting techniques are applied depending on the test tool being used, as well as the test automation architecture in use. For example, you might choose to use a scripting language with a data-driven approach within a keyword-based test automation architecture. All of these techniques and frameworks are highly functional in nature.
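To give a feel for what a data-driven, keyword-based architecture looks like, here is a minimal sketch in plain Python. Everything in it is hypothetical: `FakeLoginApp` stands in for the application under test, and the keywords would normally drive a real UI or API through an automation tool.

```python
class FakeLoginApp:
    """Hypothetical stand-in for the application under test."""

    def __init__(self) -> None:
        self.user = ""
        self.password = ""
        self.message = ""

    def submit(self) -> None:
        ok = self.user == "alice" and self.password == "s3cret"
        self.message = "Welcome" if ok else "Login failed"


# Keywords: small reusable actions that test steps refer to by name.
def enter_user(app, value): app.user = value
def enter_password(app, value): app.password = value
def click_login(app, _value): app.submit()
def verify_message(app, value): assert app.message == value, app.message

KEYWORDS = {
    "enter_user": enter_user,
    "enter_password": enter_password,
    "click_login": click_login,
    "verify_message": verify_message,
}

# The test is written once as keyword steps with placeholders...
STEPS = [
    ("enter_user", "{user}"),
    ("enter_password", "{password}"),
    ("click_login", ""),
    ("verify_message", "{expected}"),
]

# ...and run once per data row (the data-driven part).
DATA_ROWS = [
    {"user": "alice", "password": "s3cret", "expected": "Welcome"},
    {"user": "alice", "password": "wrong", "expected": "Login failed"},
]


def run_all() -> list[str]:
    results = []
    for row in DATA_ROWS:
        app = FakeLoginApp()
        try:
            for keyword, template in STEPS:
                KEYWORDS[keyword](app, template.format(**row))
            results.append("pass")
        except AssertionError:
            results.append("fail")
    return results


print(run_all())  # ['pass', 'pass']
```

Adding a new test is a matter of adding a data row, and a change in the application is absorbed in one keyword rather than in every script. That separation is the main appeal of this kind of architecture.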

In the example below from PractiTest, we see how automated functional tests can be managed, executed and measured. (Fig. 12)

Fig. 12 – Functional Automated Tests in PractiTest

It is very important to have a tool to manage your functional test cases. Many people start out using spreadsheets to manage test cases but soon learn that they are very cumbersome to maintain and lack the features needed to track and measure test execution. Test management tools, and testing tools in general, can assist greatly with that.

What About Scope and Complexity?

When learning something like functional testing, it is best to start small and simple, then grow. In fact, as mentioned earlier, the real challenge and power of functional testing is seen in the combining of test conditions.

For example, Enterprise Resource Planning (ERP) applications are often large and complex with massive functionality. The vendor can perform their testing, but it is up to the customer to perform acceptance testing, which can be both functional and non-functional. It is common to use techniques such as risk-based testing, along with robust test tools to plan, perform and evaluate the testing of large and complex systems.

Conclusion

Functional testing is the essence and core of software testing. At first, the simple cause/effect nature of functional testing seems almost too easy. It is when we face the complex functionality and business rules that functional testing gets more involved and requires test design techniques such as boundary-value analysis to cover both positive and negative conditions. Techniques such as equivalence partitioning and decision tables can help reduce the number of tests in a logical way without losing test coverage.

Test management tools such as PractiTest are needed to effectively manage, control and measure test execution, as well as test case development. Test management tools are also needed when introducing test automation, with tool integration being a very important consideration.

Hopefully, this article has helped to provide you with a deeper dive into functional testing and a way to deal with some of the opportunities and challenges that come with the territory.