Smoke testing has become a commonly used technique in software development and testing for the early and continuous detection of defects. It can be applied to software applications and systems in many ways. In this article, we explore the history of how the term “smoke testing” originated, and then use that history as an analogy for finding defects in software and systems. We also show examples of how smoke tests can be designed and performed in a variety of contexts.

Introduction

Smoke testing is sometimes called “sanity testing”, “build verification testing” or “BVT”. The purpose of smoke testing is to find defects in software at a very basic level of functionality early after some form of integration. The reality is that most test case libraries evolve over an extended period of time. The exception to that practice may be seen in new system development when there is enough time to plan a set of tests that achieve a given level of functional coverage.

A good starting point for building a more robust and complete set of tests is a set of simple smoke tests.

To deal with rapid build and release cycles seen today, especially in Agile and DevOps, smoke tests are a helpful way to know quickly if a change has introduced a new defect that degrades the integrity of the software. However, smoke tests can be performed in other contexts than Continuous Integration, such as traditional integration testing.

While regression tests can also help identify new unintentional defects, it is not common to have tests for new functionality in a regression test suite. Typically, regression tests cover baseline functions and have more conditions than smoke tests.

In this article we will look at the shared territory between smoke tests and regression tests, as well as the contrasts between these confirmatory tests.

Table Of Contents

- What is Smoke Testing? A Working Definition

- The Value of Early Incremental Tests

- How to Design Smoke Tests?

- How to Implement Smoke Tests?

- How to Perform Smoke Tests?

- How to Report and Assess the Results of Smoke Testing?

- Conclusion

What is Smoke Testing? A Working Definition

You won’t find the term “smoke testing” in the primary international standard for software testing, ISO 29119-1. However, there have been some definitions set forth in other places. Here are two notable ones.

“A test suite that covers the main functionality of a component or system to determine whether it works properly before planned testing begins. Synonyms: confidence test, sanity test, intake test” - ISTQB Glossary

“Smoke testing, in the context of software development, is a series of test cases that are run before the commencement of more rigorous tests. The goal of smoke testing is to verify that an application's main features work properly. A smoke test suite can be automated or a combination of manual and automated testing.” - Techopedia

We can see immediately in both of these definitions that smoke testing is typically not achieved in a single test case, but rather in a collection of tests. This collection of tests may vary in number, depending on the item being tested, but the goal remains the same – to find basic defects early before other work occurs on the item.

A Little History

Smoke testing in software makes more sense when we see how the term originated.

For many years, dating back to the mid-1800s, plumbers and civil engineers have injected smoke into pipes, sewers and other conduits where defects might be deadly, as in the case of natural gas leaks. Another example is how a plumber might inject smoke into a plumbing system to find leaks without the risk of water damage to walls.

Since those early days, smoke testing has been used in a variety of contexts, including automotive repair, heating and cooling systems, and now, software applications.

So, when software developers and testers were looking for a way to describe a very basic level of testing that checks whether things work together, smoke testing was an appropriate term.

One More Analogy

When I teach the concept of scenario-based testing, I often use the plumbing analogy.

Imagine you are buying or renting a home or apartment. One thing you want to know before you sign the purchase or rental agreement is the condition of the plumbing.

If there is no water turned on to the dwelling, you will not be able to tell very much at all. It is only when water is flowing through the pipes that you can detect leaks, inadequate water pressure, hot and cold water not working correctly, any smell or discoloration to the water, and so forth.

In this analogy, the pipes represent the functional process being tested. The water represents the data that flows through the pipes. And, the faucets represent the controls often seen in systems to select or restrict certain functions.

If we substitute smoke for water in this analogy, we have the essence of smoke testing. It’s a cheap, quick and easy way to at least find the leaks, even if it does not let you assess the other aspects of the plumbing system.

It is interesting to note that defects and failures in physical structures often occur where components fit together, or fail to fit properly. In software, we see the same effect where integration occurs. In addition, we can also apply the concept of smoke testing to basic functional tests.

For example, in testing APIs, failures are often seen due to very basic defects. When tested early using smoke tests, APIs can be verified and validated to help achieve solid integration. Other smoke tests can validate functionality not directly impacted by integration.

The Value of Early Incremental Tests

While a complete set of robust tests is often seen as the goal in software testing, there is a risk when these tests are performed in a burst of testing activity. The risk is that so many failures can be found so quickly that the developers are overwhelmed and unable to fix the defects quickly enough to keep up with continued testing and re-testing.

The end result is that a project may fail under the weight of too many defects found in a short period, especially toward the end of a project.

Plus, when everything in an integrated system is tested at one time, it is harder to isolate exactly where the defect might be and how it might have been introduced.

Early and simple tests can help avoid these risks while at the same time reducing the cost of fixing the defects. Since defects can cause a ripple effect in software, a defect found and fixed early is a good thing.

How to Design Smoke Tests?

A collection of smoke tests typically evolves over time. The big question is, “What should be included in the set of smoke tests?”

Since testing software integration is a key goal of smoke testing, a scenario-focused approach can be very effective. The first step in a scenario-focused approach is to define the most critical tasks performed in a system or application.

Let’s take an online banking mobile app as an example. There could be many possible user functions, but some of the most critical tasks might be:

- User logs in and checks account balance, then logs out

- User logs in and checks recent transactions, then exits the application without signing out

- User login fails on incorrect password/username combination

- User gets locked out for a period of time due to excessive incorrect login attempts

- User logs in and makes an online deposit

- User logs in and makes a transfer between checking and savings accounts

These tests are very confirmatory in nature, with the main objective of making sure the functions under test are working correctly and do not “break the build”. These tests are not designed to test variations of conditions, such as boundary value tests.
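As a rough illustration, a few of the scenarios above could be written as automated smoke tests along the following lines. This is only a sketch: `FakeBankApp` is a hypothetical in-memory stand-in for the real app, and the credentials, balances and the lockout threshold of three attempts are assumptions made for the example.

```python
import unittest


class FakeBankApp:
    """Hypothetical in-memory stand-in for the banking app under test."""

    MAX_FAILED_LOGINS = 3  # assumed lockout threshold, not from the article

    def __init__(self):
        self._credentials = ("alice", "s3cret")  # assumed test account
        self._failed_logins = 0
        self.logged_in = False
        self._balances = {"checking": 500, "savings": 1000}

    def login(self, username, password):
        if self._failed_logins >= self.MAX_FAILED_LOGINS:
            raise PermissionError("account locked")
        if (username, password) != self._credentials:
            self._failed_logins += 1
            return False
        self._failed_logins = 0
        self.logged_in = True
        return True

    def logout(self):
        self.logged_in = False

    def balance(self, account):
        if not self.logged_in:
            raise PermissionError("not logged in")
        return self._balances[account]

    def transfer(self, source, target, amount):
        if not self.logged_in:
            raise PermissionError("not logged in")
        self._balances[source] -= amount
        self._balances[target] += amount


class BankingSmokeTests(unittest.TestCase):
    """One confirmatory test per critical task; no boundary variations."""

    def setUp(self):
        self.app = FakeBankApp()

    def test_login_check_balance_logout(self):
        self.assertTrue(self.app.login("alice", "s3cret"))
        self.assertEqual(self.app.balance("checking"), 500)
        self.app.logout()
        self.assertFalse(self.app.logged_in)

    def test_login_fails_on_wrong_password(self):
        self.assertFalse(self.app.login("alice", "wrong"))
        self.assertFalse(self.app.logged_in)

    def test_lockout_after_repeated_failures(self):
        for _ in range(FakeBankApp.MAX_FAILED_LOGINS):
            self.app.login("alice", "wrong")
        with self.assertRaises(PermissionError):
            self.app.login("alice", "s3cret")

    def test_transfer_between_accounts(self):
        self.app.login("alice", "s3cret")
        self.app.transfer("checking", "savings", 200)
        self.assertEqual(self.app.balance("checking"), 300)
        self.assertEqual(self.app.balance("savings"), 1200)


def run_smoke_suite():
    """Run the smoke tests and return the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(BankingSmokeTests)
    result = unittest.TestResult()
    suite.run(result)
    return result
```

Calling `run_smoke_suite()` returns a result whose `wasSuccessful()` method tells you whether the build passed its basic checks. Each test follows one happy-path or obvious-failure scenario, which keeps the suite quick to run on every build.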

Smoke tests are similar in nature to regression tests, but there are three major differences:

- Smoke tests are often performed in conjunction with a new build or commit.

- Regression tests typically have more coverage of functional conditions.

- Regression tests are often performed prior to a release or in an ongoing monitoring cadence after a release.

A regression test might include both true and false sides of a business rule, user story or requirement.

For example, suppose a business rule states, “If a customer has preferred status, apply a 10% discount.” A smoke test would include a test for the preferred status, but might not have a test for non-preferred status. A regression test might cover both preferred and non-preferred conditions.

Even more rigorous tests would be those that cover extreme conditions, such as a customer who has had preferred status in the past, but that status expired the day before an order is placed. This case could perhaps be a smoke test or regression test, but could also be an edge case found in a larger set of functional tests.
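The difference in depth can be sketched with the discount rule. In the example below, the function `order_total_cents`, its parameters, and the choice to treat preferred status as valid through its expiry date are all assumptions made for illustration; amounts are in cents to avoid rounding issues.

```python
from datetime import date, timedelta


def order_total_cents(subtotal_cents, preferred_until, order_date):
    """Apply a 10% discount when preferred status is active on the order date.

    Assumption: status is valid through `preferred_until` inclusive;
    `None` means the customer never had preferred status.
    """
    is_preferred = preferred_until is not None and order_date <= preferred_until
    return subtotal_cents * 90 // 100 if is_preferred else subtotal_cents


ORDER_DATE = date(2024, 6, 15)  # arbitrary date chosen for the example


def smoke_test_preferred_discount():
    # Smoke level: only the "true" side of the rule.
    assert order_total_cents(10000, date(2024, 12, 31), ORDER_DATE) == 9000


def regression_test_non_preferred():
    # Regression level: also the "false" side of the rule.
    assert order_total_cents(10000, None, ORDER_DATE) == 10000


def edge_test_status_expired_yesterday():
    # Edge case: status expired the day before the order was placed.
    yesterday = ORDER_DATE - timedelta(days=1)
    assert order_total_cents(10000, yesterday, ORDER_DATE) == 10000
```

The smoke test alone confirms that the discount path works at all. The other two tests add the coverage a regression or larger functional suite would typically carry.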

How to Implement Smoke Tests?

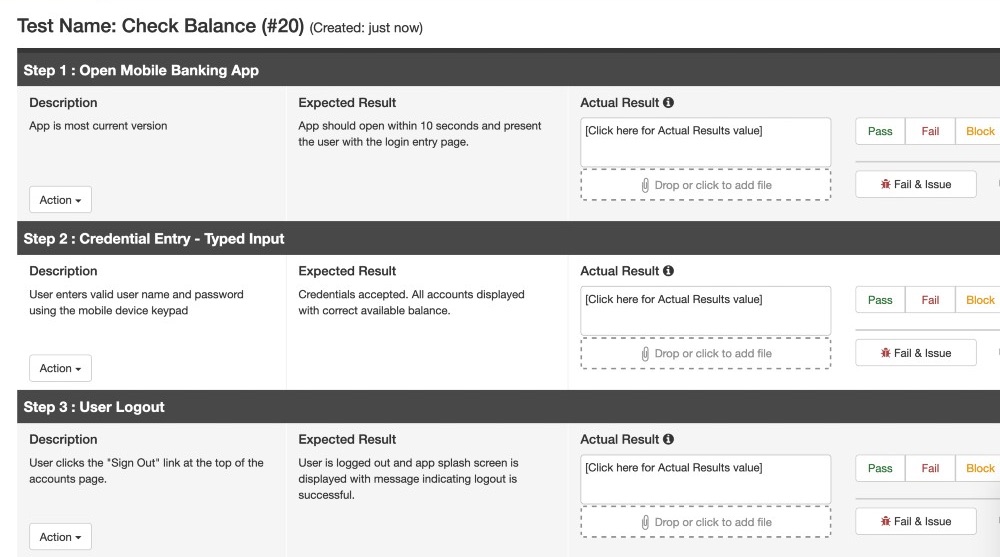

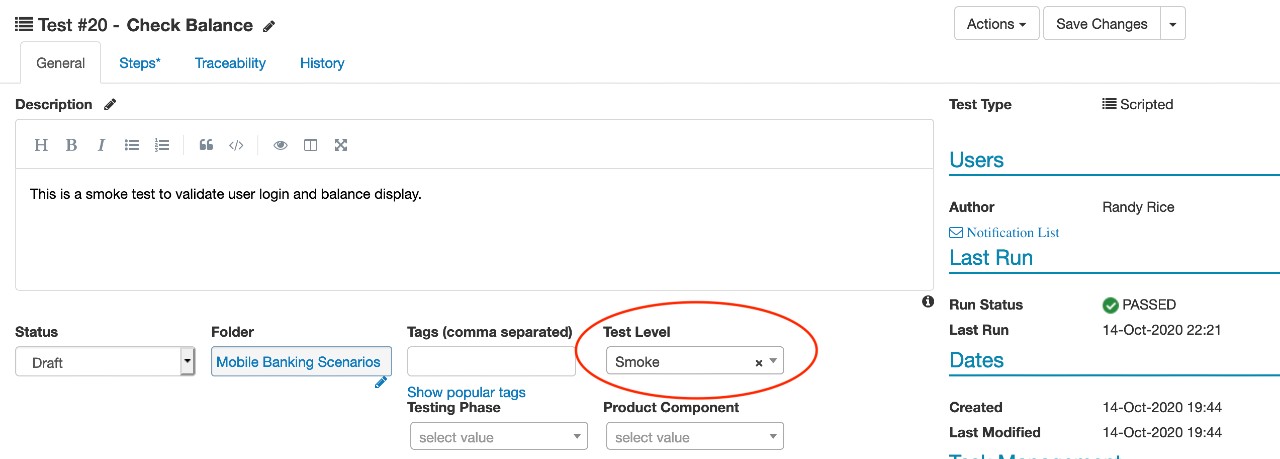

Taking the first mobile banking app test as an example, let’s see how a sample smoke test case might look as implemented in PractiTest.

Figure 1 – Smoke Test Definition in PractiTest

In Figure 1, we see the steps involved in a basic smoke test to log in to a mobile banking app, look at account balances on the main page of the app, then sign out of the app.

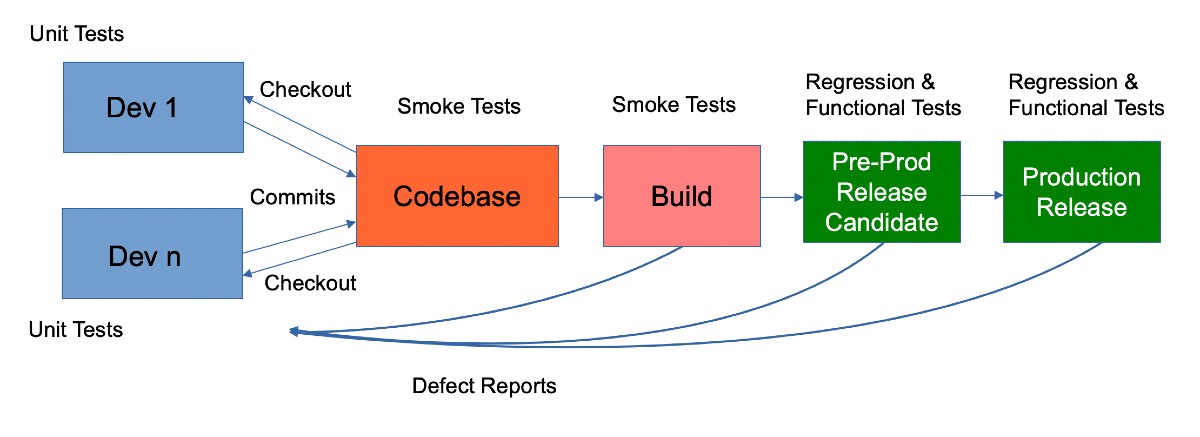

Figure 2 – Categorizing Smoke Tests

It is good practice to categorize your tests. In Figure 2, we see the Test Level of the “Check Balance” test defined as “Smoke”. This field is customizable, so you can use whichever wording you desire. In fact, you can define other test levels, such as “Regression”, “Acceptance”, and so forth.

Also, note in Figure 2 that you can see the status of this particular smoke test at the right side of the display.

How to Perform Smoke Tests?

Smoke tests can be manual or automated, but to be sustainable, they are often part of a Continuous Integration (CI) or BVT test suite that is performed in an automated way each time a new build is created. The goal of these smoke tests is to ensure that the build has basic integrity.

However, smoke tests can be performed in many contexts, including traditional waterfall life cycles. In traditional life cycles, smoke tests are typically performed as a preliminary integration test. In a traditional development context, builds may not be defined as such. Rather, development and testing may integrate units into related sets of functionality without assembling them into a build.

In the build concept, it is important to understand that build integrity is not the same as correct functionality. Functional correctness is assessed through the performance of functional tests that are designed to test new functionality and regression tests to ensure existing functionality still works correctly.

In some cases, smoke tests can be performed after a major commit.

In Figure 3, we see one example of how smoke tests fit into the flow of a Continuous Integration (CI) and Continuous Delivery (CD) pipeline. Note that smoke tests are shown here as being part of the build process, with unit tests part of development, and other tests such as regression and confirmation tests as part of pre-deployment tests.

In this view, we can see a clear distinction between smoke tests and other types of testing. Immediately after each build is created, a suite of smoke tests, or build verification tests (BVT), is performed and evaluated. In a CI flow, this is an automated process to keep pace with daily or even more frequent builds.

In Figure 3, we also see regression and confirmation tests after release. This is because some organizations have found it helpful to perform such tests to detect when unknown or external factors may cause unexpected failures.

Figure 3 – Smoke Tests in the CI/CD Pipeline
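The gating role that smoke tests play after each build can be sketched in a few lines. This is not how any particular CI server works; `run_pipeline`, the stage names and the sample checks are hypothetical and only illustrate the idea that later stages are skipped when the smoke stage fails.

```python
def run_stage(checks):
    """Run every check in a stage and collect a message per failure."""
    failures = []
    for check in checks:
        try:
            check()
        except Exception as exc:  # any exception counts as a failed check
            failures.append(f"{check.__name__}: {exc!r}")
    return failures


def run_pipeline(stages):
    """Run stages in order and stop at the first stage with failures.

    `stages` is an ordered list of (stage_name, list_of_checks).
    """
    report = []
    for name, checks in stages:
        failures = run_stage(checks)
        report.append({"stage": name, "passed": not failures, "failures": failures})
        if failures:
            break  # a broken build is not worth testing further
    return report


# Hypothetical checks standing in for real smoke and regression tests.
def app_starts():
    pass


def login_page_loads():
    raise RuntimeError("HTTP 500 from /login")


def discount_rules_still_hold():
    pass


example_report = run_pipeline([
    ("smoke", [app_starts, login_page_loads]),
    ("regression", [discount_rules_still_hold]),
])
```

In this example the smoke stage fails, so `example_report` contains a single entry and the regression stage never runs. That mirrors the purpose of a build verification step: reject a broken build quickly before spending time on deeper tests.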

There are certainly other ways to perform smoke tests and other contexts in which smoke testing may be applicable. For example, in a classic or hybrid waterfall lifecycle, smoke tests might be performed as part of integration or system testing.

In addition, while automation is a very attractive way to implement and perform smoke tests, the reality is that many people still conduct a lot of manual tests. This is due to many factors, some of which are quite challenging to overcome.

How to Report and Assess the Results of Smoke Testing?

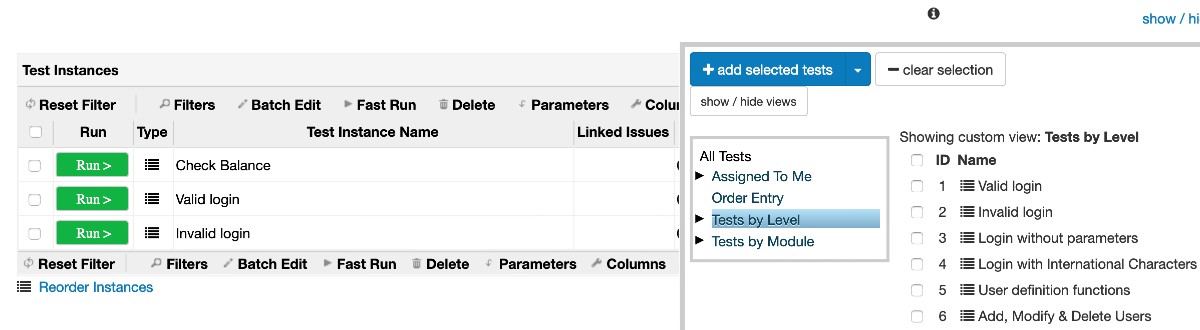

In PractiTest, you can initiate the run of smoke tests, either in manual or automated ways. In Figure 4, you can see an example of smoke tests that are ready to run.

Figure 4 – Smoke Tests Ready to Run

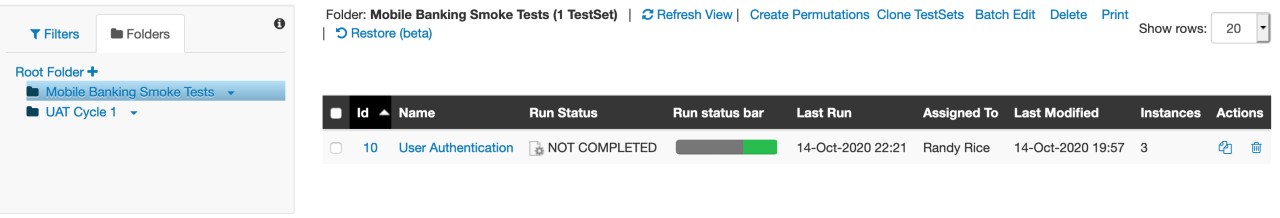

Smoke test results have the unique requirement of rapid reporting. When a new build fails its smoke tests, the failures must be reported and fixed immediately. In Figure 5, we see the status of smoke tests that are being performed as shown in PractiTest.

Figure 5 – Status of Smoke Tests
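Because smoke test results need to reach the team quickly, it can help to reduce a run to a short summary with a clear verdict. The sketch below is independent of any tool; the status names, the `summarize_smoke_run` function and the build identifier are assumptions made for the example.

```python
from collections import Counter


def summarize_smoke_run(build_id, results):
    """Condense (test_name, status) pairs into a short summary.

    Assumption: any status other than "passed" needs attention
    and causes the build to be rejected.
    """
    counts = Counter(status for _, status in results)
    needs_attention = [name for name, status in results if status != "passed"]
    return {
        "build": build_id,
        "counts": dict(counts),
        "needs_attention": needs_attention,
        "verdict": "accept" if not needs_attention else "reject",
    }


def format_report(summary):
    """Render the summary as a few lines suitable for a chat or email alert."""
    lines = [f"Build {summary['build']}: {summary['verdict'].upper()}"]
    for status, count in sorted(summary["counts"].items()):
        lines.append(f"  {status}: {count}")
    for name in summary["needs_attention"]:
        lines.append(f"  needs attention: {name}")
    return "\n".join(lines)


example_summary = summarize_smoke_run(
    "build-101",  # hypothetical build identifier
    [
        ("Check Balance", "passed"),
        ("Failed Login", "passed"),
        ("Transfer Funds", "failed"),
    ],
)
```

Passing `example_summary` to `format_report` gives a message that names the rejected build and the one test that needs attention, which is usually all a developer needs in order to start fixing the problem.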

Conclusion

Smoke tests can be informal, but are more commonly seen as an ever-growing set of simple, defined tests that continually verify the integrity of an ever-changing software application or system.

While no particular lifecycle is required for smoke tests, perhaps the most common context today is a CI/CD pipeline, such as seen in DevOps or agile.

Also, smoke tests are most efficiently and sustainably performed as automated tests, but can be performed manually.

The great thing about smoke tests is the simple nature in which they can be defined and performed. The barrier to entry is low, and the value obtained can be great!

Regardless of how you perform smoke tests, the main thing is that you keep them simple, understand their purpose and perform them early and often throughout software development, test and release cycles.

By Randall W. Rice

Randall W. Rice, CTAL

Randall W. Rice is a leading author, speaker, consultant and practitioner in the field of software testing and software quality, with over 40 years of experience in building and testing software projects in a variety of domains, including defense, medical, financial and insurance.

You can read more at his website.