Introduction

Much has been written on the topics of test planning and test design, but far less about test execution. Perhaps that is because there are so many ways to perform testing that it is difficult to cover the topic adequately.

In this article, I present some approaches and key considerations for software test execution with the hope that you can assess your own situation and adapt these for your own use.

Key Principles

When teaching software testing, I start with principles because they form the foundation of techniques and approaches. It does little good to learn a technique only to miss why that technique is used or not used in a given situation. This gap leads to ineffective and inefficient tests, and therefore, to missed defects and missed release dates.

Principle #1 – You are testing more than just software.

Several things must work together to allow the software to be tested, such as hardware, related components, networking, data, people and procedures. You are actually testing at least a portion of the system that houses the software.

For an accurate test, all of these items must be under configuration management. This means you must know and maintain control of versions, identifiers, and anything else that must be in sync.

Principle #2 – You must have a way to control a test.

Test control involves several things, such as knowing when to start a test (entry criteria), when to stop a test (exit criteria), what to measure and where to get the measurements, and knowing when and where to make adjustments.

Principle #3 – Care must be taken as tests can cause unintentional damage.

This principle applies to more than just software testing. Recall that the Chornobyl meltdown was started by a test that went badly!

A big part of test execution is ensuring you have protected other system components from harm. This is a primary reason that test environments are so important, and also the reason that testing in production is risky.

Principle #4 – Performing the test will often reveal tests that should be added, changed and/or re-prioritized.

The discovery of tests that should be changed, added, re-prioritized, and perhaps deleted is an added value of test execution. In fact, it is often only in test execution that such things can be learned and adjustments made accordingly.

Principle #5 – Your actual test may look much different from the planned test.

Test planning is great. However, keep in mind that, as with any plan, things happen that can require re-planning. For example, new features may be requested, or the timeframe for testing may be shortened. Your best tester might get sick or leave. That's why a good test plan should also have a "Plan B" and perhaps even a "Plan C."

Principle #6 – Your test is only as good as the test environment.

If your test environment is not a good representation of the target environment, your test results will be untrustworthy. For example, if your test data only contains certain test conditions, but not all conditions, you may well miss some important conditions.

That leads to another important principle.

Principle #7 – Exhaustive testing is impossible.

While we would love to have test data with all possible conditions and condition combinations, the sheer magnitude of such data makes this impossible except in trivial cases.
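To get a feel for the magnitude, here is a minimal sketch in Python. The field names and value counts are invented purely for illustration, and the one-second-per-test figure is an assumption, not a measurement.

```python
from math import prod

# Hypothetical input form: each field and the number of distinct
# values we would want to try for it (all figures are assumptions).
field_value_counts = {
    "country": 195,
    "currency": 150,
    "payment_method": 6,
    "customer_type": 4,
    "discount_code_state": 3,
    "browser": 5,
}


def total_combinations(value_counts):
    """Number of tests needed to cover every combination of values."""
    return prod(value_counts.values())


def days_to_run(test_count, seconds_per_test=1.0):
    """Wall-clock days needed to run the tests back to back."""
    return test_count * seconds_per_test / 86_400


combos = total_combinations(field_value_counts)
print(f"{combos:,} combinations")
print(f"{days_to_run(combos):,.1f} days at one test per second")
```

Even these six modest fields multiply out to more than ten million combinations, or roughly four months of non-stop execution at one test per second. Real applications have far more fields and far more values per field.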

Another example is hardware and systems. You might find it impossible to replicate all possible platforms your applications will need to run on. There are some ways to help achieve device coverage, such as beta testing, but that falls short simply because you have no control over what is being tested or not tested.

Principle #8 – Static testing can be more effective than dynamic testing.

If done correctly, static testing, whether by tools or humans, can find many more defects in a shorter time period than performing repeated cycles of test/fix/test.

One reason for this is that in static testing, such as in an inspection, you have the chance to see all the code (or, perhaps, a major section of the code) all at once. So instead of finding five failures due to defects one at a time, you get to see the actual defects all at once.

In my experience with walkthroughs, reviews and inspections, it is not uncommon to find over ten issues in a review that takes an hour or less. In dynamic test execution, it would take many hours to run ten cycles of testing.

Plus, with static testing, you also have the opportunity to find defects early, such as in requirements, user stories, use cases, and so forth.

Two Major Approaches to Test Execution

As we look at test execution, we need to make the distinction between the two most basic test approaches, which are manual testing and automated testing.

These two approaches are totally different in nature, even to the point of some people asserting that automated testing is not really testing at all. To be clear, I consider automated testing as "testing", but at times it can feel like you are coding instead of testing.

And, yes, when you spend several hours trying to get a tool to actually interact with an object, it can cause you to think, "I could have tested this thing manually about a thousand times!"

I would suggest that learning occurs in the test implementation process, both manual and automated. That is why some of the early testing authors considered test analysis and design part of test execution. You can find defects even during test analysis and design!

Manual testing is still alive and well and I think it will always be needed. I work with test automation every day and there are times when I must perform a test manually. For example, to create test automation, I must understand how the item under test behaves first. Another example is when the test automation script fails, I need to see what has changed.

I have seen cases where a manual test revealed issues the tool totally missed. This was not due to scripting issues, but simply not being able to interact with an object the same way a human would interact with it.

Even though it may appear that the great majority of people successfully use test automation to conduct most of their tests, the reality is that a lot of manual testing is still being performed for whatever reason.

It is also common for organizations to have a hybrid of manual and automated testing. Manual testing is used for new changes and new features, while automation is used for regression tests, smoke tests and similar.

Some test automation, such as build verification tests (BVT), CI tests, and smoke tests, is lighter than regression testing. Unfortunately, too many people consider these tests sufficient as regression tests, which they are not.

Manual Test Methods

These methods include:

Scripted or pre-defined tests

Pre-defined tests can be based on a specification or an understanding of the item to be tested. Test scripts are very procedural in nature, but do not apply in all situations.

For example, in testing a Graphical User Interface (GUI), you may want to test a search field. You have conditions to test, such as a full search, wild-card search and so forth, but the sequence of the tests isn't important.

A big advantage of pre-defined tests is that you know in advance what should be tested and you can measure your test coverage based on test traceability.
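As a rough illustration of measuring coverage from traceability, the sketch below computes the share of requirements that have at least one test traced to them. The requirement and test case IDs are hypothetical, and "at least one test per requirement" is only one of several possible coverage definitions.

```python
# Hypothetical traceability matrix: requirement ID -> test case IDs.
traceability = {
    "REQ-001": ["TC-01", "TC-02"],
    "REQ-002": ["TC-03"],
    "REQ-003": [],
    "REQ-004": ["TC-04", "TC-05"],
}


def requirement_coverage(matrix):
    """Return (percent of requirements with at least one test,
    sorted list of requirement IDs with no tests)."""
    if not matrix:
        return 0.0, []
    uncovered = sorted(req for req, tests in matrix.items() if not tests)
    covered = len(matrix) - len(uncovered)
    return 100.0 * covered / len(matrix), uncovered


percent, gaps = requirement_coverage(traceability)
print(f"Requirement coverage: {percent:.0f}%")
print("No tests trace to:", ", ".join(gaps) or "none")
```

A report like this shows where tests are missing, but it says nothing about how good the existing tests are.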

The downsides of pre-defined tests are:

- You may miss tests that are not in the test basis (requirements, etc.).

- You may not fully understand all the expected results, especially when the test basis is ambiguous.

- It takes time to define and implement the tests.

- You must maintain the tests, such as when the item's behavior changes.

Exploratory tests

Exploratory tests are just as they sound – you are exploring an application to learn and test at the same time.

Key skills needed in exploratory testing are:

- Critical thinking

- Observation

- Knowledge of defect behavior

One major way exploratory testing is conducted is Session-Based Testing (SBT). In SBT, testing is focused on a particular aspect of the item under test and performed in short, defined windows of time, such as one to two hours or less.

SBT provides a way to manage and measure exploratory testing with very tangible outcome reporting.

Automated Test Methods

As mentioned above, test automation is a fundamentally different way of performing testing.

Basically, test automation is software testing software and there are various ways this can be accomplished.

Scripted (high coding effort)

Highly scripted test automation is basically "scripts from scratch", or at least scripts based on a previous test or template.

Scripted test automation can be very labor-intensive to create, but the investment is often front-end loaded. However, maintenance can be high when applications are highly dynamic and subject to change.

Capture/playback (low code effort)

While often labeled as "low code" or "no code" tools, the reality is that many times someone will need to create or modify the scripting to achieve the desired test.

These tools do have value in that testers can create initial tests very quickly. Depending on the specific tool, even more complex functions such as data-driven tests can be achieved with greater ease than in manual scripted approaches. Just keep in mind that nobody escapes the maintenance of test automation!
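For readers unfamiliar with the term, a data-driven test runs the same check against a table of inputs and expected results. The sketch below shows the idea in plain Python; `apply_discount` is a hypothetical stand-in for the item under test, and the cases are made up for illustration.

```python
def apply_discount(price, percent):
    """Hypothetical item under test: price after a percentage discount."""
    return round(price * (1 - percent / 100), 2)


# Each row: (price, discount percent, expected result).
CASES = [
    (100.00, 0, 100.00),
    (100.00, 10, 90.00),
    (80.00, 25, 60.00),
    (200.00, 100, 0.00),
]


def run_cases(cases):
    """Run every row through the same check and collect the failures."""
    failures = []
    for price, percent, expected in cases:
        actual = apply_discount(price, percent)
        if actual != expected:
            failures.append((price, percent, expected, actual))
    return failures


failures = run_cases(CASES)
print(f"{len(CASES) - len(failures)} of {len(CASES)} cases passed")
```

Adding a new condition means adding a row of data rather than writing a new script, which is where much of the leverage comes from.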

Pros and Cons of Manual and Automated Testing

Here are some things to understand about manual and automated testing.

Manual testing benefits

You can observe actual human-based software and system behavior in a variety of external conditions (light, dark, dry, damp, etc.) that would otherwise not be possible using only test automation. You can also see how the software behaves when response times are slow or when user access is slow.

The ability to assess usability issues is also a big advantage of manual testing. You get to experience the software under test the same as a real user would. The key here is to try to keep a user mindset as opposed to a mindset more familiar with the application.

Manual testing drawbacks and risks

- Manual testing can get tedious and boring. This is not always the case, but consider the tests that are the same time after time. These are non-exploratory tests and usually pre-defined verification tests.

- There is the possibility of human error in performing pre-defined testing. For example, the test case might indicate to press F4, but you get the "fat finger syndrome" and press F5 instead. Or perhaps, you simply miss seeing an outcome that is indicative of a possible defect.

- Manual testing typically takes longer to perform. When the deadline looms, people may reduce the testing effort to meet the release date. Test automation doesn't get tired, and once created can execute faster than manual tests. There are exceptions to this, but test automation should run faster than manual tests. If they don't, refactoring of the tests is usually needed.

Automated testing benefits

Test automation can help eliminate manual errors. However, keep in mind that automation test scripts and the tools that run them can have bugs in them.

Test automation can give leverage on very large volumes of test data. For example, if you need to generate thousands of rows of test data, or compare the results of a one-hundred page report against a baseline, a tool makes the job much easier!
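As a small sketch of the baseline comparison idea, the example below uses Python's standard `difflib` module to compare report lines against a baseline. The report contents are invented, and a real report would normally be read from files rather than defined inline.

```python
import difflib


def report_differences(baseline_lines, actual_lines):
    """Return unified-diff lines; an empty list means the report matches."""
    return list(difflib.unified_diff(
        baseline_lines, actual_lines,
        fromfile="baseline", tofile="actual", lineterm="",
    ))


# Hypothetical report lines; the last balance differs from the baseline.
baseline = ["Account,Balance", "1001,250.00", "1002,75.50"]
actual = ["Account,Balance", "1001,250.00", "1002,75.05"]

for line in report_differences(baseline, actual):
    print(line)
```

The same few lines work whether the report has three rows or three hundred thousand, which is exactly the kind of checking that is tedious and error-prone to do by eye.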

Automated testing drawbacks and risks

- Unrealistic expectations on the part of everyone, but especially management, can lead to high disappointment if the test automation effort stalls or fails.

- It takes significant time to implement a reasonably significant set of automated tests. It takes even longer to see a positive return on investment.

- The maintenance requirement of test automation has always been one of the greatest challenges. This is not necessarily a tool issue. Maintenance is most often driven by application changes. Also, if applications are not developed with maintainability in mind (such as not using object identifiers), then test automation is almost forced to rely on more fragile means of locating objects, such as XPath expressions, CSS selectors, or X/Y coordinates. However, how you architect and design your test automation can have a big impact on how easy or hard maintenance becomes.

- In the case of a commercial tool, the vendor may go out of business, sell the tool to another vendor, reduce support, and so on.

- An open source test tool may die due to lack of contribution or other reasons.

When Test Execution Occurs – The Software Development Lifecycle (SDLC) and the Software Testing Life Cycle

The SDLC should define when testing occurs. The problem is, most people can't fully describe the SDLC they are using.

For right now, let's just distinguish between the two most basic lifecycle models: the sequential and the iterative.

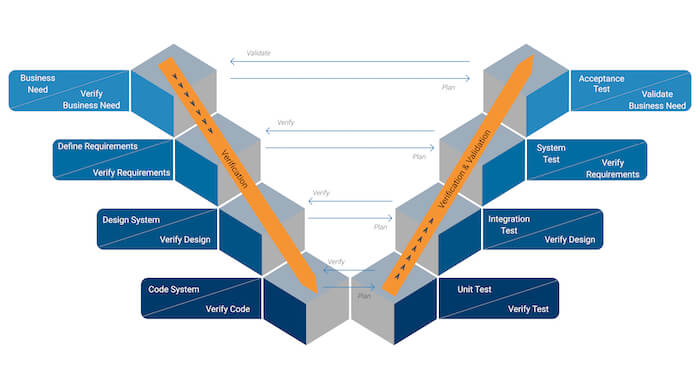

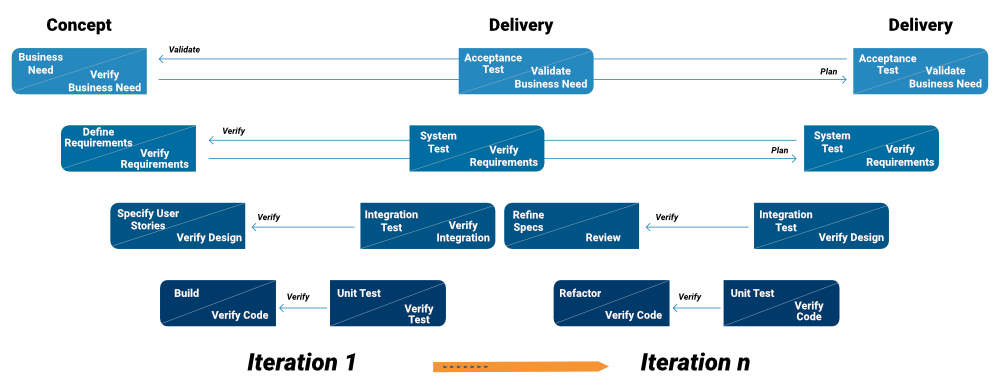

In the sequential lifecycle model, testing often occurs toward the end of the lifecycle in system testing or acceptance testing activities. Testing can also occur during development in unit and integration test activities. It is not uncommon for static testing such as reviews to occur during requirement development, system design, and coding activities (Figure 1).

Figure 1 – My Representation of the V-Diagram.

There are many depictions of the V diagram and this is how I prefer to show it. (Rice and Perry, "Surviving the Top Ten Challenges of Software Testing")

In iterative lifecycle models, testing often occurs in shorter time frames during each increment of development or maintenance. Interestingly, when you examine the work that actually occurs in an increment, it closely resembles a "mini V" diagram.

When seen as a series of small V iterations, it might look something like Figure 2. Prior to the iterations, requirements may be defined. After the last iteration, higher levels of testing can be performed before deployment.

Depending on the status of the iteration quality, deployment can occur after any iteration.

Figure 2 – Testing in Iterations Seen in Mini Vs.

Levels of Testing

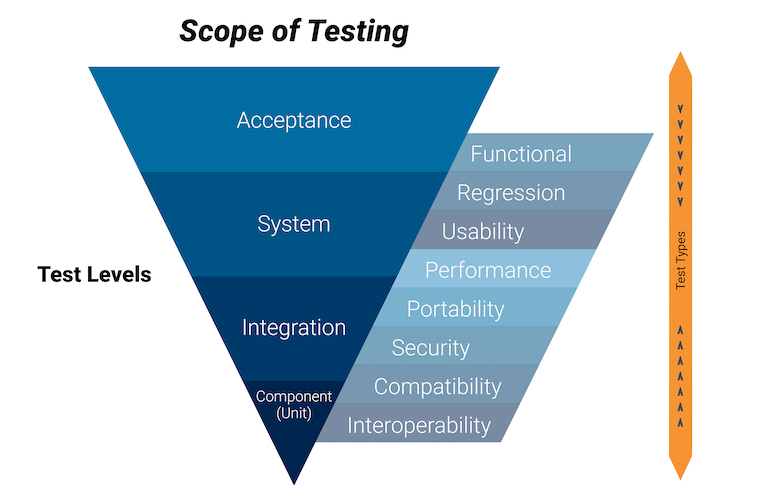

The levels of testing become apparent when we look at the lifecycle view of testing. Test levels are often based on scope, but they can also be driven by when they occur.

In Figure 3, we see a view of the test levels that resembles a funnel, with the smallest tests (unit tests) at the bottom and the largest scope of testing (system and acceptance testing) at the top. This is often the view of what is known as "top down" testing.

There is no prescribed order in the funnel, so you can perform unit tests first if desired. However, there are situations when you almost have to start at the larger tests, such as user scenarios, then if failures are seen, work down to the unit test level in the debugging process.

Figure 3 – Testing Funnel.

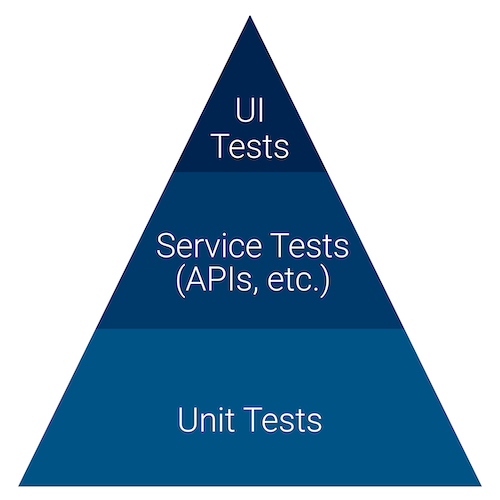

In Figure 4, we see a different view, often referred to by agile testers as the "Testing Pyramid", which is based on the quantity of tests in a "bottom-up" approach to testing. In this very simplified view, the unit tests are more numerous and more automated and the user-oriented tests are fewer and more manual in nature.

Like the V-diagram, there are many variations on the testing pyramid.

It is important to understand that the testing pyramid is based on the assumption that you are building the application or system. Therefore, you have the opportunity to test from the bottom up.

This is not always the case, such as seen in purchased software, or software that you only get to test once it is delivered to you. In that case, the top-down approach (funnel) is often faster and more feasible.

Figure 4 – Testing Pyramid.

The Role of the Test Plan and Test Objectives in Test Execution

One of the great values of a test plan is that it can describe what should be achieved during a period of testing. A major way that this is described is by test objectives.

A test objective is simply a statement of what should be tested. Test objectives are very important as they form the scope of testing. If there are too many test objectives, you risk not being able to complete the test. If there are too few test objectives, you risk not having enough confidence in the test because too few things were tested.

How to Know When to Start and Stop Testing – The Role of Entry and Exit Criteria

Two big questions in testing are, "When do I start?" and "When do I stop?"

A great way to define these starting and stopping points is with entry and exit criteria. These criteria should be defined in a test plan.

Entry criteria are simply the things that should be in place before testing begins. Examples are:

- The application has passed a previous review or test

- The test environment is properly configured

- Tests have been designed to achieve desired coverage

- A risk analysis has been performed for the item to be tested

The major reason entry criteria are needed is to avoid testing something before it is ready. Yes, early testing is good, but testing too early can be counter-productive.

Exit criteria are the outcomes that must be seen to consider testing complete. Examples are:

- There are no unresolved major defects

- Desired coverage levels have been achieved

- All moderate and high risks have been mitigated
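Exit criteria are easiest to apply when they are stated in a form that can be checked. The sketch below encodes the three example criteria above; the `ExecutionStatus` fields and the 90% coverage threshold are assumptions for illustration, and your own test plan would supply the real values.

```python
from dataclasses import dataclass


@dataclass
class ExecutionStatus:
    """Snapshot of the testing effort (hypothetical fields)."""
    open_major_defects: int
    coverage_percent: float
    unmitigated_risks: int  # moderate and high risks still open


def unmet_exit_criteria(status, required_coverage=90.0):
    """Return a list of the exit criteria that are not yet satisfied."""
    unmet = []
    if status.open_major_defects > 0:
        unmet.append(f"{status.open_major_defects} unresolved major defect(s)")
    if status.coverage_percent < required_coverage:
        unmet.append(
            f"coverage {status.coverage_percent:.0f}% is below "
            f"{required_coverage:.0f}%"
        )
    if status.unmitigated_risks > 0:
        unmet.append(f"{status.unmitigated_risks} unmitigated risk(s)")
    return unmet


status = ExecutionStatus(open_major_defects=2, coverage_percent=85.0,
                         unmitigated_risks=0)
problems = unmet_exit_criteria(status)
print("Testing complete" if not problems else "Keep testing: " + "; ".join(problems))
```

Returning the list of unmet criteria, rather than a simple yes or no, gives you something concrete to put in a status report.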

When to Make Adjustments in the Testing Effort

It is very common to make adjustments to testing during test execution. This can occur for many reasons, but some of the more common reasons are:

- Too many or too few failures being seen

- Tests that may be deemed to be weak or ineffective

- Tests that may be considered to be inconclusive, too long or too complex

- The basis of tests may have changed, such as new features added

Reporting Your Test Results

While test reporting may seem like just more paperwork, it is actually one of the most important activities in testing. It is in test reporting that all we do in testing becomes visible to management and everyone else.

Test reporting documents what you tested, when you tested something, and what you learned from the test. Good test reporting has saved many a tester when someone says they were never informed about an issue!

Test reporting can take the form of status reports and final reports, as well as incident reports.

Dashboards and Metrics

A big part of the testing story can be told visually through dashboards. Good dashboards are:

- Simple and usable – Not too many items and easy to read

- Meaningful and focused – Contains the right metrics

- Current at all times – Does not require manual effort to update
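One way to keep a dashboard current without manual effort is to compute its figures directly from raw test results. The sketch below is a minimal example; the outcome names and the choice of metrics are assumptions, and your tools may report results differently.

```python
from collections import Counter


def summarize(results):
    """Summarize (test_id, outcome) pairs into a few dashboard figures.

    Outcomes are assumed to be 'passed', 'failed', 'blocked' or 'not_run'.
    """
    counts = Counter(outcome for _, outcome in results)
    total = sum(counts.values())
    executed = counts["passed"] + counts["failed"]
    return {
        "total": total,
        "executed_percent": 100.0 * executed / total if total else 0.0,
        "pass_rate_percent": 100.0 * counts["passed"] / executed if executed else 0.0,
        "blocked": counts["blocked"],
    }


# Hypothetical results from one test cycle.
results = [
    ("TC-01", "passed"),
    ("TC-02", "passed"),
    ("TC-03", "failed"),
    ("TC-04", "blocked"),
    ("TC-05", "not_run"),
]

summary = summarize(results)
print(f"Executed: {summary['executed_percent']:.0f}% of {summary['total']} tests")
print(f"Pass rate: {summary['pass_rate_percent']:.0f}% of executed tests")
print(f"Blocked: {summary['blocked']}")
```

Note that the pass rate is calculated against executed tests only, so blocked and not-run tests do not make the numbers look better or worse than they are.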

If you would like to know more about software testing metrics and dashboards, please see this article.

Conclusion

Test execution can be seen in many ways. How you perform testing depends on your lifecycle, your organization, the risk, the tools you have, and the skills possessed by the testers.

Test execution must be managed and controlled based on the information you are receiving from the testing effort. Like driving a car, the testing dashboard tells you what you need to know to arrive at your destination.

The concepts in this article can help you define a workable and effective framework for your own software test execution.