Coordinating your automated and manual testing

Do you have tests that you still run manually, even though they are already automated?

If you do, you are not alone. Even in companies with mature automation processes, some QA teams still “find reasons” to run some of their automated test cases manually…

The most common “reasons” for this strange behaviour are:

- “We only run automated tests manually if they are very important.” This contradicts the fact that tests are usually chosen for automation based on their importance and significance in the first place.

- “We don’t entirely trust your automation.” It is true that creating good automated tests is no trivial task, but if you have already invested time and effort in automation, you might as well make sure it is done right, so you can count on it fully and not waste your time or money.

- “We’re not sure what is covered by automation and what is covered by manual tests”. This is common when you have two separate teams: one for automation and the other for manual testing.

Why do you need both Automated and Manual testing?

Nowadays it is rare to find a company that does not have a blend of both automated and manual testing frameworks. When the combination is successful, it is because manual and automated testing don’t diminish one another; they enhance one another and create more synergistic testing.

Automated testing usually improves testing speed and consistency, but it is only as good as the scripts you write. Manual testing complements the automated testing process by discovering issues from the user’s perspective, unexpected bugs from unscripted scenarios, etc. Human testing heuristics are still very much needed alongside efficient automation.

Some examples of a successful combination of automated and manual testing: using a blend of both to cover different aspects of the same feature; having manual tests pick up where automation ends; or running “semi-automatic” tests that need manual intervention before the next set of automated tests can continue.

Managing manual and automated testing together

Most testing teams today run some mix of manual and automated testing, but for some reason many of these organizations also manage these efforts separately. By isolating the management and reporting of their testing based on type, they make it harder for stakeholders to get a complete overview of their products and projects, losing much of the value they were aiming to get from automation in the first place.

The new reality of Agile and DevOps dictates a new approach, in which testing leaders need to coordinate testing efforts across all testing tools and approaches: from manual testing (scripted and exploratory), via the team's functional automation efforts, all the way to unit and integration tests run by CI/CD frameworks.

The following recommendations are based on hundreds of case studies and past experiences of PractiTest’s clients, along with the findings of the State of Testing report.

Why is automation and manual test management interesting right now?

Three main changes have brought about the need for combined and efficient manual and automated test management.

1. The first change involves the adoption of automation practices and tools. According to the State of Testing Report, … of testers are now handling some level of test automation. This explains the boom in tools that facilitate test automation, in line with the widespread adoption of automated testing across all industries.

The growing diversity of test automation tools has also lowered their cost while increasing their ease of use. “Codeless automation” and other buzzwords attest to the trend of creating friendlier user experiences for the less code-inclined and for those wishing to incorporate test automation. These tools are also becoming more and more robust.

2. The second change has to do with shifts in the Dev & Testing Processes. The prevailing shifts we are seeing in the development and testing process include:

- Agile: based on shorter cycles that require constant stability

- CI: based on the idea of constantly building and testing the system

- DevOps: extends testing to the deployment process and production environments, so it is no longer limited to development

All of the above derive from the growing demand to speed things up and enable faster releases.

3. The third change has to do with the “players” involved in testing. The State of Testing report indicates that testing teams are shrinking, and even diverse teams have fewer testers in them. At the same time, testers are expected, or even required, to have some scripting capabilities (83% of respondents stated that functional test automation and scripting are important skills today, and something managers look for when hiring).

On the flip side, developers are gradually taking a more active role in testing tasks, and in some cases other teams in the organization take part in testing as well.

Is test automation replacing manual testing?

Not in the near future.

Even though automated testing is on the rise, this does not mean that manual testing is going to disappear. Each type of testing serves a different function in the QA process, and together they complement each other. To understand this, let us quickly outline the important differences between manual and automated tests.

The difference between manual & automated tests

Manual and Automated tests differ from one another based on three main points:

- Purpose of the Tests:

Manual tests are meant to evaluate the product for the desired quality, value, fit, etc., and to find bugs and areas for improvement. Automated tests are meant to maintain the product’s functional and non-functional stability and to detect changes that may point to issues. The line between the two is fine, but the distinction is important to accept.

- Nature of the Tests:

While manual tests are (relatively) inexpensive to write, they are expensive to run (hiring and training testers, equipment, time, etc.). Automated tests, by contrast, are (relatively) expensive to write but inexpensive to run.

Other differentiators include:

Run duration - often longer for manual tests and shorter for automated ones.

Coverage level - manual tests often cover higher-level requirements and functions, and are more flexible to edit and adapt. Automated tests, on the other hand, often cover lower-level matters and are inflexible (unless rewritten).

Test results - manual test results depend directly on the tester performing them, whereas automated test results are independent of the tester and of any “human subjectivity”.

Growth and change - manual tests tend to grow slowly, along with the product, as required. Automated tests, on the other hand, tend to grow constantly in number over time.

- Runs & Results:

No. of runs - Manual tests typically have a small(er) number of runs and repetitions compared to automated tests.

Test execution - manual tests are distributed among and performed by QA team members, whereas automated tests run on automation frameworks.

Issue reporting - failed manual tests are usually reported directly as bugs. With automated tests, finding the source of an issue often requires analysis and review.

Point of interest - with manual tests, the interest lies in the direct results; with automated tests, it lies in trends and benchmarks.

Manual Testing = Evaluation & Discovery


Common misconceptions about automated testing projects

Before moving on to how to manage manual and automated testing together, there are a few misconceptions to address and acknowledge. Automated testing is not the next evolutionary step in testing! Nor is adding automated tests a magical solution to everything (not everything can or should be automated). Here’s why, along with an example.

Often, when people think of test automation they envision something like this:

test planning > manual testing > automated tests > runs forever > magically reports.

Supposedly, you start with the test planning process and generate a lot of manual test cases up front. Then you look at those manual test cases and select a few that you want to automate. After eventually automating all of your manual test cases, you believe they can just run forever without any further effort on your part. Then, magically, you get reports from them and your stability just flows. Pretty soon you are thinking that this is a seamless way to work, that you don't need manual test cases, and that doing anything any other way is a waste of time.

Sound familiar?

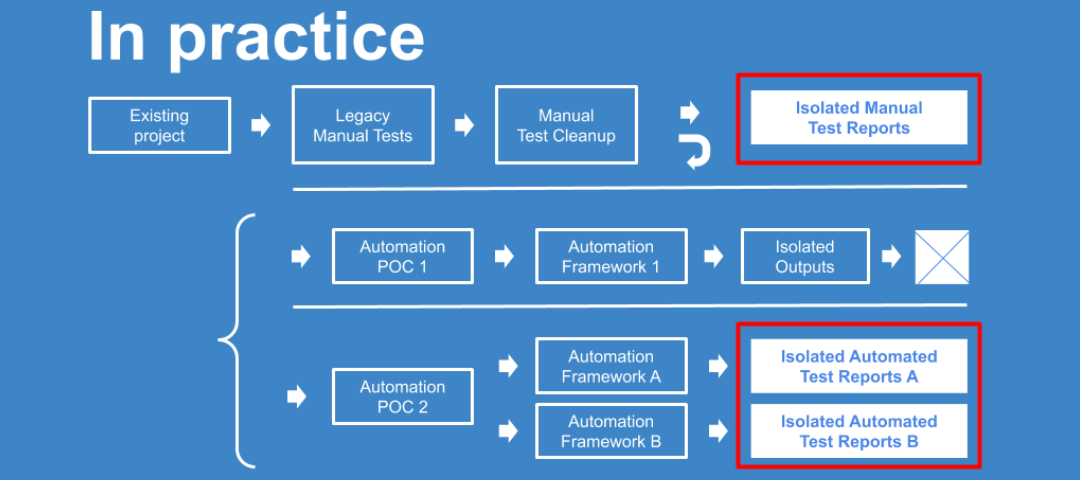

In practice things aren’t that simple.

As an example, take a development manager who says they want to start an automation project right now by automating part of their existing test cases.

Most testing projects do not start from scratch. So the team starts by looking into their existing project and their legacy of manual test cases, and realizes there is a very big gap between the existing manual test cases and the ones they need. Cleanup of the manual test cases begins, and at this point they have good manual test reports, but again, only for manual test cases.

As mentioned, they want to introduce automation...

So they open a separate project and go ahead and automate at least some of what is currently running, along with an automation POC (proof of concept).

Then they try a framework, either because they think it is the best one to use or because someone has experience with it. But they are already running another framework, so they try to work with both...

Working with the new framework and its outputs is actually really hard. The project stalls while they keep working on it for a couple of years, until they realize that they have hit a wall!

Now they have some isolated manual test reports and a little bit of automation, but it is not enough to be satisfied. So they start another POC and leave the first test cases in place, because they had already invested in them, and why not run them every night?

By the second POC, they understand that one of the main issues was that they had separated the frameworks and used two or three different tools for the automation: one for the API level, one for the UI level, and maybe one for the unit test level. They ran them all and believed their second POC was successful.

However...

They now have separate reports for their manual and automation frameworks and that becomes the new issue.

Even if they succeed in using separate frameworks that report on separate areas, it takes a lot of work, if it is possible at all, to make sense of it all when communicating what is going on to the rest of the organization.

This is just one example of a fundamental challenge in managing manual and automated testing side by side.

The Challenges of Managing Manual & Automated Testing Together

When testers were asked, as part of the State of Testing survey, about the biggest challenges affecting test management and communication with their teams, it was no surprise that 50% named integrating manual and automated testing results and providing visibility into testing results. This challenge of managing “apples and oranges” stems from the core differences between manual and automated tests.

- Very different entities

Automated tests are usually deeper and more specific, while manual tests are usually broader and less defined. Each test type also varies within itself: scripted vs. exploratory and regression vs. new functionality for manual testing, or GUI vs. API vs. performance for automation (to name just a few).

- Different scale of tests and runs

There are usually many more automated tests than manual ones, especially when you add unit tests. There are also many more automated runs than manual ones, as they run constantly (daily or even continuously).

- Different timelines for results

Automated tests can complete full cycles in minutes or hours, while manual results may take hours or days to complete.

- Different approach to analysing results

A failed manual run usually signals a bug, and there is some correlation between the number of failed tests and the number of bugs found. Automated tests produce many more false alarms (failures that do not point to a real bug), so there are usually many more failed automated tests than bugs found by them.
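Because many automated failures share one underlying cause, a common first triage step is to collapse failures that carry the same error before counting them as issues. The sketch below is a minimal illustration, not a feature of any particular tool. It assumes each failure is available as a (test name, error message) pair; the `signature` normalization rules (masking hex ids and numbers) and the sample data are hypothetical and would need tuning for a real suite.

```python
import re
from collections import defaultdict


def signature(message: str) -> str:
    """Normalize a failure message so failures that differ only in
    volatile details (hex ids, numbers) share one signature."""
    sig = re.sub(r"0x[0-9a-fA-F]+", "<hex>", message)  # mask hex ids first
    sig = re.sub(r"\d+", "<n>", sig)                    # then mask remaining digits
    return sig.strip()


def group_failures(failures):
    """Map each signature to the names of the failed tests that produced it."""
    groups = defaultdict(list)
    for test_name, message in failures:
        groups[signature(message)].append(test_name)
    return dict(groups)


# Hypothetical nightly failures: five failed tests.
failures = [
    ("test_login_ui", "Timeout after 30 s waiting for #login"),
    ("test_profile_ui", "Timeout after 45 s waiting for #login"),
    ("test_checkout_api", "HTTP 500 from /cart/42"),
    ("test_cart_api", "HTTP 500 from /cart/7"),
    ("test_search_api", "Expected 10 results, got 9"),
]

groups = group_failures(failures)
for sig, tests in groups.items():
    print(f"{len(tests)} failure(s): {sig}")
```

Here five failed tests collapse into three candidate issues, which is closer to what a reviewer actually has to investigate. The masking rules are deliberately crude; a real setup might also group by stack trace or failing assertion.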

Tips for managing manual and automated tests together

To reach their full coverage and efficiency potential, manual and automated tests should be properly managed together. Here are some top tips, based on experience, for achieving just that.

- Architect and manage your testing efforts

Have one person or a small group orchestrate and coordinate testing efforts across the team. If no one is assigned responsibility, it won’t happen; it is that simple. In parallel, define test management coordination as a shared goal and advance it just as you would any feature or aspect of your product, including success benchmarks to monitor your efforts.

Finally, ensure there is team-wide visibility and legitimacy for the tasks related to testing and test coordination. This can be achieved with various test management tools, but make sure they provide advanced shared dashboards and reports that give this cross-test visibility.

- Balance between distribution and centralization

It is OK to use a number of tools and platforms to cover your testing needs. Many systems integrate with one another: bug trackers, automation frameworks, development management tools, etc. The tool you use for running a test is usually not the best tool for managing the testing process (there are exceptions, of course). When possible, try to centralize at least the test results, and if possible, their management as well.
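Centralizing results usually starts with mapping every source into one common record. The sketch below shows that idea under stated assumptions: the automation framework emits JUnit-style XML (a report format many frameworks can produce), and manual runs are exported as simple dicts with hypothetical `test` and `outcome` keys. The `TestResult` type, the status vocabulary, and the choice to map both "skipped" and "blocked" to `not_run` are illustrative assumptions, not a standard schema.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass


@dataclass
class TestResult:
    name: str
    source: str  # "automated" or "manual"
    status: str  # "passed", "failed", or "not_run"


def from_junit_xml(xml_text: str) -> list[TestResult]:
    """Convert a JUnit-style XML report into common TestResult records."""
    root = ET.fromstring(xml_text)
    results = []
    # iter() finds <testcase> elements under <testsuite> or <testsuites> alike.
    for case in root.iter("testcase"):
        if case.find("failure") is not None or case.find("error") is not None:
            status = "failed"
        elif case.find("skipped") is not None:
            status = "not_run"
        else:
            status = "passed"
        results.append(TestResult(case.get("name"), "automated", status))
    return results


def from_manual_runs(runs: list[dict]) -> list[TestResult]:
    """Convert hypothetical manual run records into the same schema."""
    mapping = {"pass": "passed", "fail": "failed", "blocked": "not_run"}
    return [TestResult(r["test"], "manual", mapping[r["outcome"]]) for r in runs]


junit = """<testsuite name="api">
  <testcase classname="cart" name="add_item"/>
  <testcase classname="cart" name="remove_item"><failure message="HTTP 500"/></testcase>
  <testcase classname="cart" name="apply_coupon"><skipped/></testcase>
</testsuite>"""

manual = [
    {"test": "checkout_flow", "outcome": "pass"},
    {"test": "coupon_ui", "outcome": "blocked"},
]

combined = from_junit_xml(junit) + from_manual_runs(manual)
for r in combined:
    print(r.source, r.name, r.status)
```

Once both sources share one record type, a single report or dashboard can consume them without caring which tool produced each result.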

- Not all your tests are comparable, but some might be surprisingly so...

In most cases there is no real way to create ONE coherent metric or dashboard that showcases everything. However, you may find tests with comparable parameters across different test types, and these are definitely worth aggregating into a representative report. It is also OK to report the status of a test differently based on its nature and type.

- Be consistent with names and conventions

Use the same names and conventions across your tests to improve team communication. If needed, place “legends” or explanations in strategic places, but make sure you are all “speaking the same language”.
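Shared naming is what makes aggregating comparable tests possible. The minimal sketch below assumes every result, manual or automated, carries the same product-area tag (here the hypothetical tags "auth" and "checkout") and computes a pass rate per area across test types. The data and the `pass_rate_by_area` helper are hypothetical, and treating a manual pass and an automated pass as equivalent is only reasonable for tests you have judged comparable.

```python
from collections import defaultdict

# Hypothetical results: (test name, test type, product-area tag, passed?)
results = [
    ("login_api", "automated", "auth", True),
    ("login_ui", "automated", "auth", False),
    ("login_exploratory", "manual", "auth", True),
    ("cart_api", "automated", "checkout", True),
    ("checkout_regression", "manual", "checkout", True),
]


def pass_rate_by_area(rows):
    """Aggregate passed/total per area tag, regardless of test type."""
    totals = defaultdict(lambda: [0, 0])  # area -> [passed, total]
    for _name, _test_type, area, passed in rows:
        totals[area][0] += int(passed)
        totals[area][1] += 1
    return {area: passed / total for area, (passed, total) in totals.items()}


rates = pass_rate_by_area(results)
for area, rate in rates.items():
    print(f"{area}: {rate:.0%}")
```

If one team tagged the area "auth" and another "login", the aggregation would silently split into two rows, which is exactly the kind of drift a shared convention prevents.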

- Group your tests based on what makes sense

At times it will make sense to group tests and graphs/reports in different ways. For example:

- Tests and Runs based on the testers who need to manage and execute the testing

- Graphs and metrics based on the areas of the product or the attributes you are reporting.

There doesn’t need to be a correlation between the grouping you use for test execution and the grouping you use to distribute results.
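One way to keep execution and reporting groupings independent is to store each run once, with both attributes, and derive each view on demand. The sketch below is a minimal illustration under that assumption; the record fields (`test`, `owner`, `area`) and the `group_by` helper are hypothetical, not part of any specific tool.

```python
from collections import defaultdict

# Hypothetical run records; each carries both an owner and a product area.
runs = [
    {"test": "login_ui", "owner": "dana", "area": "auth"},
    {"test": "login_api", "owner": "ci", "area": "auth"},
    {"test": "cart_api", "owner": "ci", "area": "checkout"},
    {"test": "checkout_flow", "owner": "dana", "area": "checkout"},
]


def group_by(records, key):
    """Return {value of `key`: [test names]} for any record field."""
    groups = defaultdict(list)
    for rec in records:
        groups[rec[key]].append(rec["test"])
    return dict(groups)


execution_view = group_by(runs, "owner")  # who executes what
reporting_view = group_by(runs, "area")   # what stakeholders look at
print(execution_view)
print(reporting_view)
```

The same four runs feed both views, so changing how results are reported never requires reorganizing how tests are assigned and executed.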

- Not all results will interest every member of your team

When creating metrics, dashboards, and reports, think about who is interested in the information you are working so hard to share, and present it based on their needs and what they should know. It is OK to use the same results in different metrics or dashboards; this can highlight different sides of your results, and some people relate better to certain types of result representations than to others. Since it is virtually impossible to create self-explanatory metrics and dashboards, always include a short, clear description or caption of what is being shown. Be especially consistent when working on multiple dashboards.

Final note:

Good management of manual & automated testing is like conducting an orchestra - different tools, playing different tunes, at times at different rhythms, with the shared goal of making something greater than what any individual player can achieve. While it requires precise planning and conducting, the results are often extraordinary!

PractiTest’s Test Management solution orchestrates Manual testing, Exploratory Testing, and Test Automation Management all in one centralized hub of information. You can manage the runs and results of your automated tests on the same platform you use to manage your manual testing, to ensure full QA coverage and visibility.

Robust built-in integrations provide a streamlined process and improve team communication. And the best part is the ability to view and share real-time test results using advanced customizable reports to make smarter decisions and release better software, faster.